How do data engineers tame Big Data?

Dataconomy

FEBRUARY 23, 2023

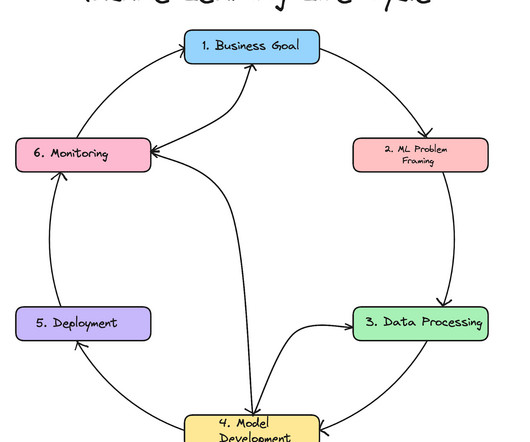

This involves creating data validation rules, monitoring data quality, and implementing processes to correct any errors that are identified.

Creating data pipelines and workflows

Data engineers create data pipelines and workflows that enable data to be collected, processed, and analyzed efficiently.
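To make the ideas of validation rules, quality monitoring, and a pipeline step more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not a description of any particular tool: the record fields (`user_id`, `amount`), the two rules, and the helper names `Rule`, `failed_rules`, `run_pipeline`, and `quality_ratio` are all hypothetical and chosen only for this example.

```python
from dataclasses import dataclass
from typing import Callable

Record = dict  # hypothetical record shape: {"user_id": str, "amount": number}


@dataclass(frozen=True)
class Rule:
    """A named validation rule; `check` returns True when the record passes."""
    name: str
    check: Callable[[Record], bool]


# Illustrative rules only; real rules depend on the dataset.
RULES = [
    Rule("user_id is present", lambda r: r.get("user_id") not in (None, "")),
    Rule(
        "amount is non-negative",
        lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
    ),
]


def failed_rules(record, rules=RULES):
    """Return the names of all rules the record violates."""
    return [rule.name for rule in rules if not rule.check(record)]


def run_pipeline(records):
    """Split records into clean ones and rejected ones (with failure reasons)."""
    clean, rejected = [], []
    for record in records:
        failures = failed_rules(record)
        if failures:
            # Rejected records keep their reasons so they can be corrected later.
            rejected.append((record, failures))
        else:
            clean.append(record)
    return clean, rejected


def quality_ratio(clean, rejected):
    """A simple data quality metric: the share of records that passed all rules."""
    total = len(clean) + len(rejected)
    return len(clean) / total if total else 1.0
```

In this sketch, rejected records are kept alongside the names of the rules they failed, so a correction process can pick them up later, and `quality_ratio` gives a single number that could be tracked over time as a basic quality monitor.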
