7 Ways to Avoid Errors In Your Data Pipeline

Smart Data Collective

DECEMBER 28, 2022

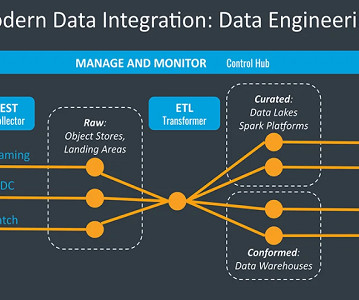

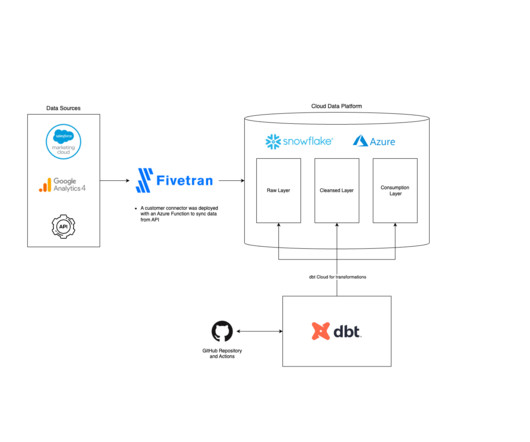

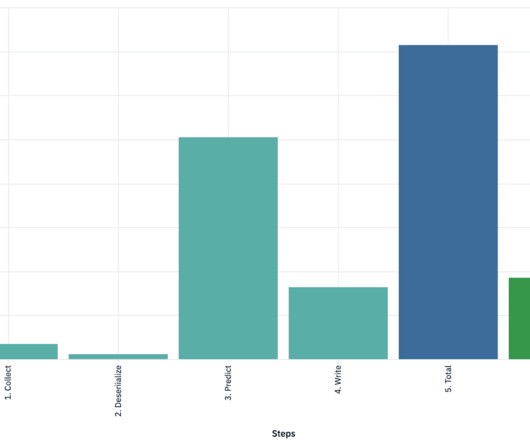

A data pipeline is a technical system that automates the flow of data from a source to a destination. While a pipeline has many benefits, an error in it can cause serious disruptions to your business. Here are some of the best practices for preventing errors in your data pipeline:

1. Monitor Your Data Sources.
