Balancing Innovation and Ethics: AI and LLMs in Modern Sales Engagement

insideBIGDATA

OCTOBER 17, 2024

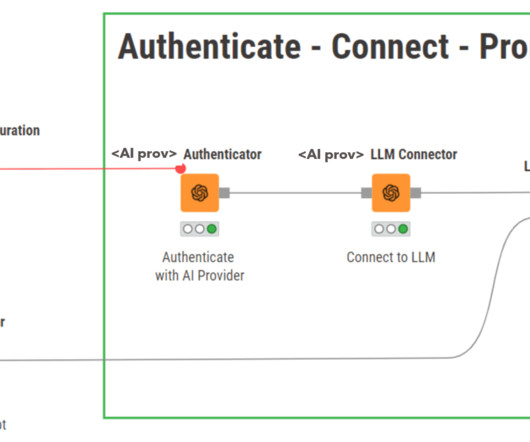

In this contributed article, Logan Kelly, Founder and President of CallSine, discusses how AI and large language models (LLMs) are being combined to boost sales teams' outreach. These advances are changing sales engagement by delivering substantial gains in efficiency and personalization. However, they also bring challenges that organizations must address when adopting these tools.
