The Tale of Apache Hadoop YARN!

Analytics Vidhya

MAY 31, 2022

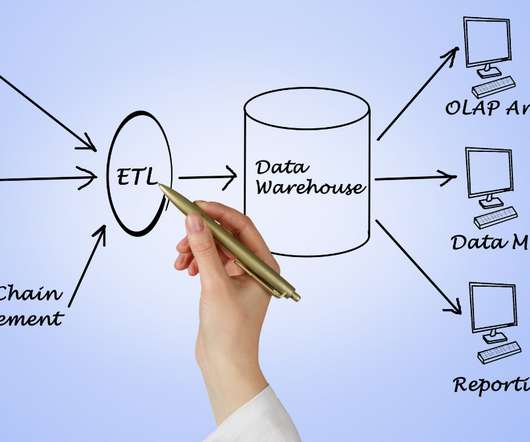

Initially, YARN was described as a “Redesigned Resource Manager” because it separates MapReduce's processing engine from its resource-management function. Apart from resource management, […]

The post The Tale of Apache Hadoop YARN! appeared first on Analytics Vidhya.
