Boosting RAG-based intelligent document assistants using entity extraction, SQL querying, and agents with Amazon Bedrock

AWS Machine Learning Blog

DECEMBER 6, 2023

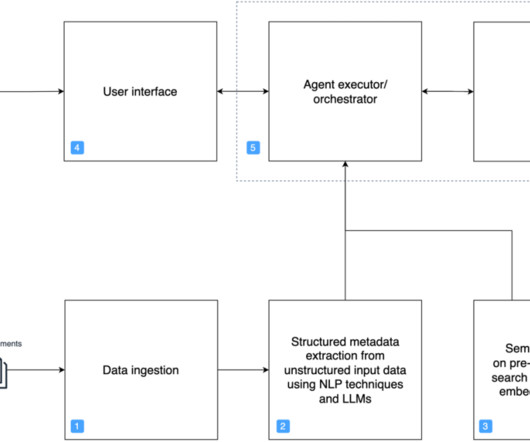

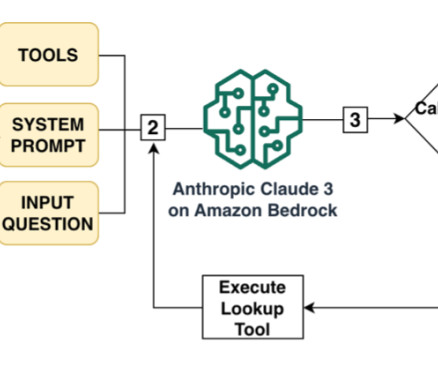

Overview of RAG

RAG solutions are inspired by representation learning and semantic search ideas that have been gradually adopted in ranking problems (for example, recommendation and search) and natural language processing (NLP) tasks since 2010. But how can we implement this approach and integrate it into an LLM-based conversational AI?
