Real value, real time: Production AI with Amazon SageMaker and Tecton

AWS Machine Learning Blog

DECEMBER 4, 2024

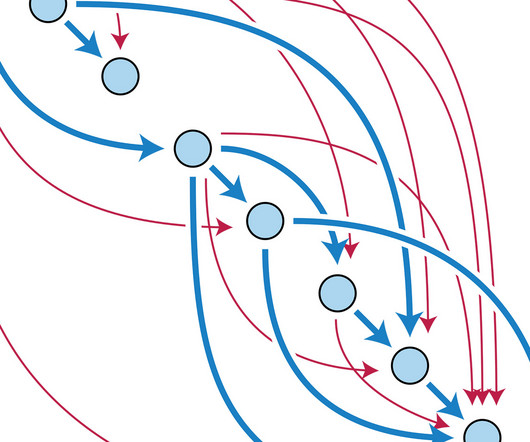

This framework creates a central hub for feature management and governance with enterprise feature store capabilities, making it straightforward to observe the data lineage for each feature pipeline, monitor data quality, and reuse features across multiple models and teams. This process is shown in the following diagram.

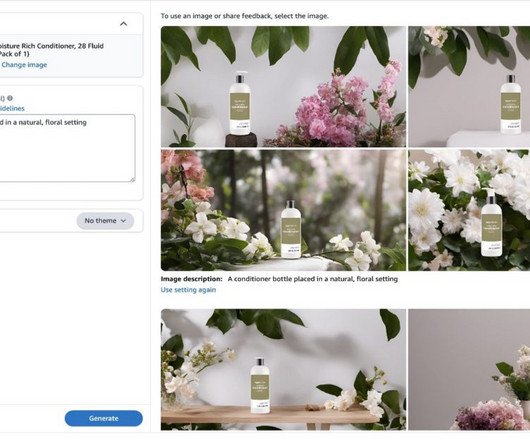
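To make the three capabilities above concrete (lineage, data quality monitoring, and reuse), here is a minimal in-memory sketch in plain Python. It is not the Tecton or SageMaker API; `FeatureDefinition`, `FeatureRegistry`, `null_rate`, and the feature, source, and model names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class FeatureDefinition:
    """Hypothetical record of one feature (not a Tecton class)."""
    name: str
    sources: list[str]  # upstream tables or streams, i.e. the lineage inputs
    owner: str
    consumers: set[str] = field(default_factory=set)  # models that reuse it


class FeatureRegistry:
    """Toy central hub: one definition per feature, shared by all models."""

    def __init__(self) -> None:
        self._features: dict[str, FeatureDefinition] = {}

    def register(self, feature: FeatureDefinition) -> None:
        # Reject duplicates so teams reuse a feature instead of redefining it.
        if feature.name in self._features:
            raise ValueError(f"feature {feature.name!r} is already registered")
        self._features[feature.name] = feature

    def use(self, feature_name: str, model_name: str) -> FeatureDefinition:
        # Record which model consumes the feature, then hand back the definition.
        feature = self._features[feature_name]
        feature.consumers.add(model_name)
        return feature

    def lineage(self, feature_name: str) -> dict[str, list[str]]:
        # Upstream sources and downstream consumers for one feature.
        feature = self._features[feature_name]
        return {
            "sources": list(feature.sources),
            "consumers": sorted(feature.consumers),
        }


def null_rate(values: list) -> float:
    """A very simple data quality signal: the fraction of missing values."""
    if not values:
        return 0.0
    return sum(v is None for v in values) / len(values)


# Assumed example names: one feature shared by two models.
registry = FeatureRegistry()
registry.register(
    FeatureDefinition("user_txn_count_7d", ["transactions"], "fraud-team")
)
registry.use("user_txn_count_7d", "fraud_model")
registry.use("user_txn_count_7d", "credit_model")
report = registry.lineage("user_txn_count_7d")
```

In this sketch the registry is the single place where a feature is defined, so the lineage report can list both the upstream sources and every model that depends on the feature. A production feature store would also persist these definitions and compute quality metrics on the materialized data.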
