Data pipelines

Dataconomy

JUNE 3, 2025

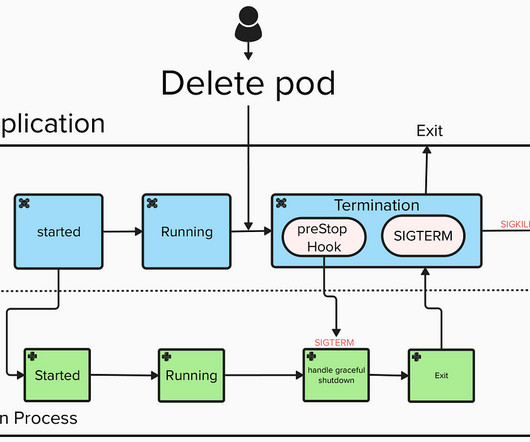

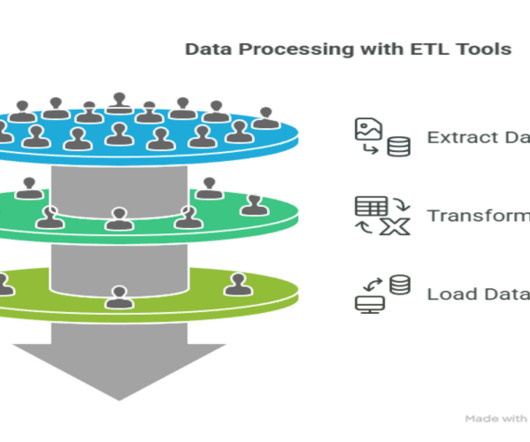

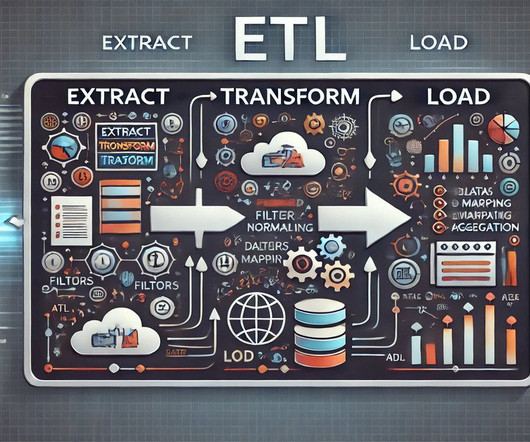

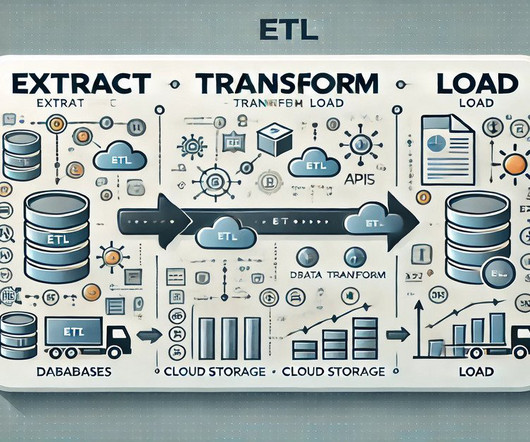

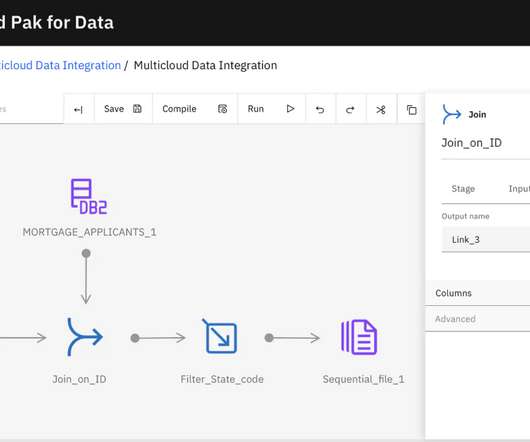

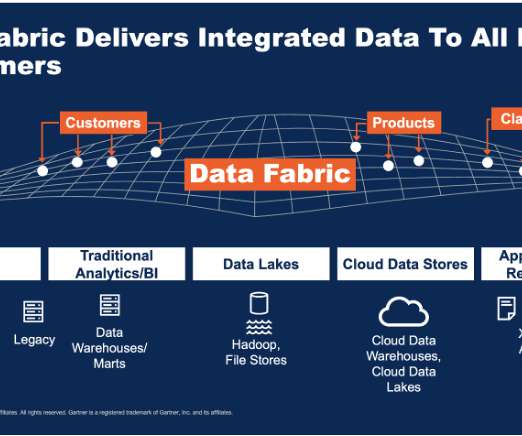

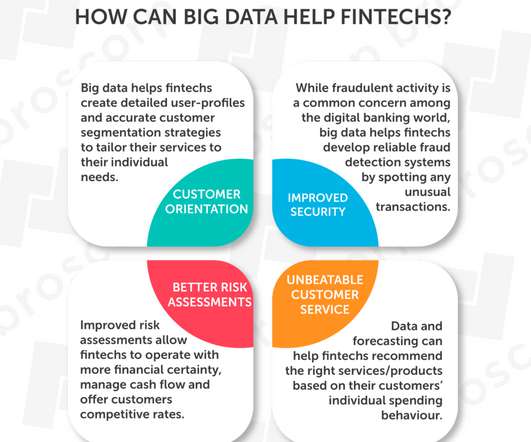

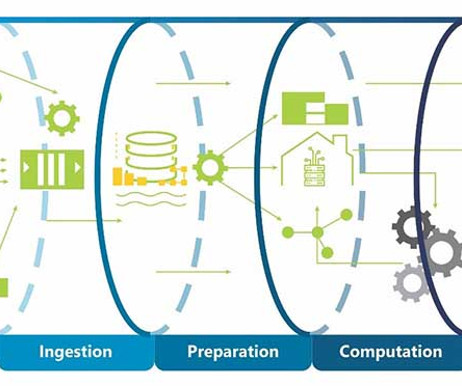

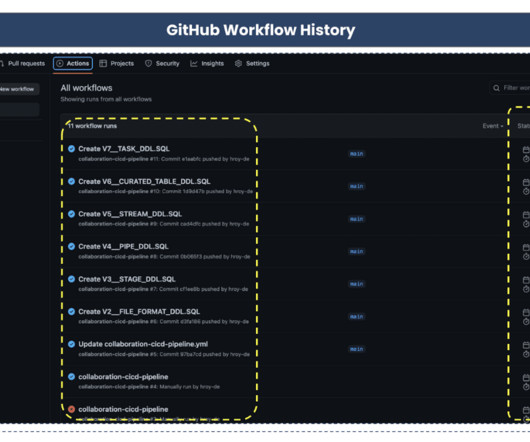

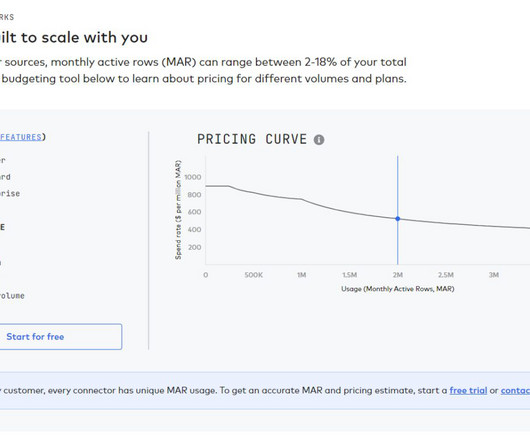

Data pipelines are essential in our increasingly data-driven world, enabling organizations to automate the flow of information from diverse sources to analytical platforms.

What are data pipelines?

Purpose of a data pipeline

Data pipelines serve various essential functions within an organization.
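
The flow described here (data pulled from several sources, processed, then delivered to an analytical platform) can be illustrated with a minimal extract-transform-load (ETL) sketch in Python. It is only an illustration under stated assumptions, not a reference implementation. The two sources, the `orders` table, and the functions `extract`, `transform`, `load`, and `run_pipeline` are hypothetical names chosen for this example. An in-memory SQLite database stands in for the analytical platform.

```python
import csv
import io
import sqlite3
from typing import Iterable, Iterator

# Hypothetical source 1: a CSV export (an in-memory string stands in for a file).
CSV_SOURCE = """order_id,amount,region
1,19.99,EU
2,5.00,US
3,,US
"""

# Hypothetical source 2: records shaped the way a JSON API might return them.
API_SOURCE = [
    {"order_id": "4", "amount": "42.50", "region": "eu"},
    {"order_id": "5", "amount": "7.25", "region": "APAC"},
]


def extract() -> Iterator[dict]:
    """Yield raw records from every source, one at a time."""
    yield from csv.DictReader(io.StringIO(CSV_SOURCE))
    yield from API_SOURCE


def transform(records: Iterable[dict]) -> Iterator[tuple[int, float, str]]:
    """Normalize types and region casing; drop records with a missing amount."""
    for rec in records:
        if not rec["amount"]:
            continue  # skip incomplete rows instead of loading bad data
        yield int(rec["order_id"]), float(rec["amount"]), rec["region"].upper()


def load(rows: Iterable[tuple[int, float, str]], conn: sqlite3.Connection) -> None:
    """Write cleaned rows into a hypothetical analytics table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, region TEXT)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn: sqlite3.Connection) -> None:
    # Stages are chained lazily, so records stream through without being buffered.
    load(transform(extract()), conn)


if __name__ == "__main__":
    db = sqlite3.connect(":memory:")
    run_pipeline(db)
    # A simple analytical query over the loaded data: revenue per region.
    for region, total in db.execute(
        "SELECT region, ROUND(SUM(amount), 2) FROM orders "
        "GROUP BY region ORDER BY region"
    ):
        print(region, total)
```

Because each stage is a generator, the pipeline processes one record at a time rather than holding every source in memory. In a real deployment the sources, the validation rules, and the destination would typically be replaced by the organization's own systems, and a scheduler or orchestrator would run the job automatically.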

Let's personalize your content