Build a Scalable Data Pipeline with Apache Kafka

Analytics Vidhya

MARCH 10, 2023

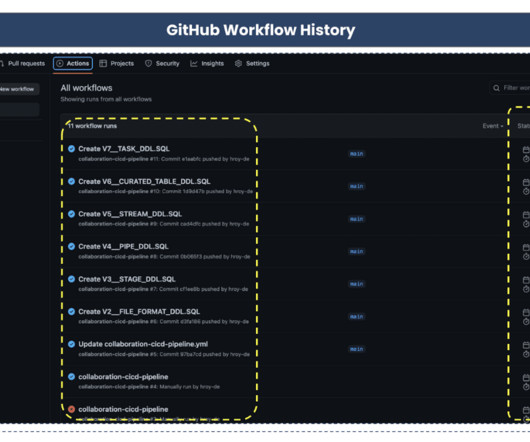

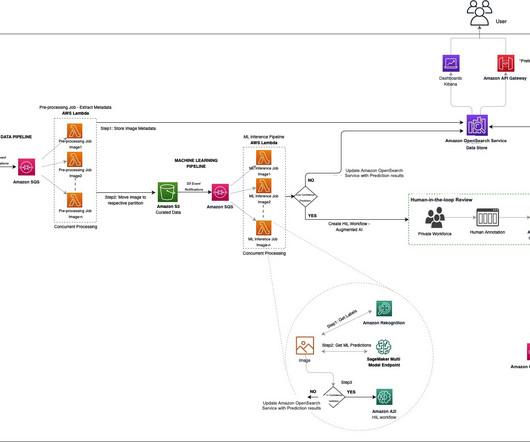

Kafka is based on the idea of a distributed commit log, which stores and manages streams of information that can still work even […] The post Build a Scalable Data Pipeline with Apache Kafka appeared first on Analytics Vidhya. Kafka was developed at LinkedIn and released as open source in 2011.
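To make the commit-log idea concrete, here is a minimal single-process sketch in Python. It is not Kafka's implementation or API: the `CommitLog` class and its `append` and `read` methods are hypothetical names chosen for illustration, and the sketch leaves out the distributed parts (partitioning, replication, persistence). It only shows the core pattern of appending records in order and letting each reader track its own offset.

```python
from dataclasses import dataclass, field


@dataclass
class CommitLog:
    """Toy in-memory append-only log (hypothetical, not Kafka's actual code)."""

    records: list = field(default_factory=list)

    def append(self, record):
        """Append a record to the end of the log and return its offset."""
        self.records.append(record)
        return len(self.records) - 1

    def read(self, offset, max_records=10):
        """Return up to max_records records starting at the given offset."""
        return self.records[offset:offset + max_records]


log = CommitLog()
first = log.append("page_view:user1")
second = log.append("click:user2")

# Each reader supplies its own offset, so reading never removes
# records and one reader does not affect another.
reader_a = log.read(0)
reader_b = log.read(second)
```

Because records are only ever appended and readers only move their own offsets, a reader that falls behind or restarts can resume from the last offset it processed, which is the property the commit-log design relies on.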
