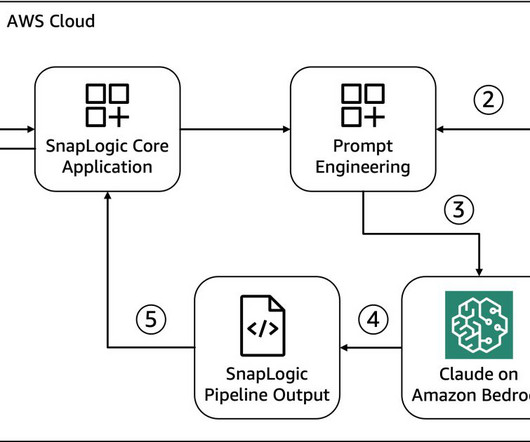

How SnapLogic built a text-to-pipeline application with Amazon Bedrock to translate business intent into action

NOVEMBER 24, 2023

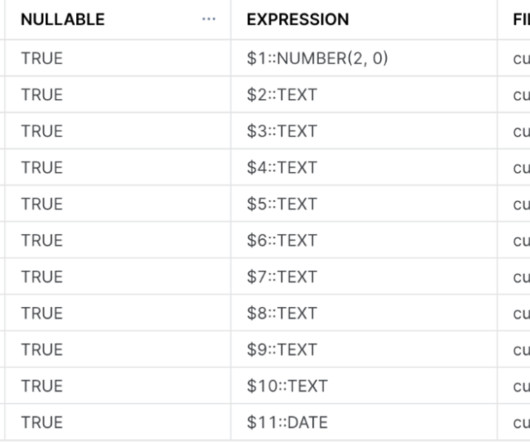

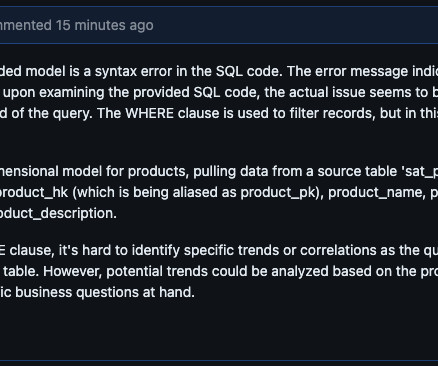

Iris was designed to use machine learning (ML) algorithms to predict the next steps in building a data pipeline.

Let’s combine these suggestions to improve upon our original prompt:

H: Your job is to act as an expert on ETL pipelines.
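The `Human:` prefix in the prompt above suggests the Human/Assistant turn format that Anthropic's Claude text-completion models expect. The following is a minimal sketch of how such a prompt might be assembled in code, not SnapLogic's actual implementation. The function names `build_prompt` and `build_request_body`, the sample user request, and the `max_tokens_to_sample` value are assumptions made for illustration; only the role instruction sentence comes from the text, and the remainder of the original prompt is not reproduced.

```python
import json

# The only part taken from the article; the rest of the prompt is not shown there.
ROLE_INSTRUCTION = "Your job is to act as an expert on ETL pipelines."


def build_prompt(user_request: str) -> str:
    """Wrap a business request in a Human/Assistant turn (hypothetical helper).

    Assumes a model that expects the prompt to open with "\n\nHuman:" and
    close with "\n\nAssistant:", leaving the model to write the reply.
    """
    return (
        f"\n\nHuman: {ROLE_INSTRUCTION}\n\n"
        f"{user_request.strip()}"
        "\n\nAssistant:"
    )


def build_request_body(user_request: str, max_tokens: int = 512) -> str:
    """Serialize the prompt as a JSON request body (hypothetical helper).

    The field names assume a Claude text-completion style request; other
    models may use different fields.
    """
    return json.dumps(
        {
            "prompt": build_prompt(user_request),
            "max_tokens_to_sample": max_tokens,
        }
    )


if __name__ == "__main__":
    # Illustrative request only; not taken from the article.
    print(build_prompt("Describe a pipeline that loads orders from S3 into a warehouse."))
```

Keeping the role instruction in a single constant makes it easy to iterate on the wording, which is the part of the prompt the article is refining.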
