What Is a Data Pipeline? A Detailed Explanation

Smart Data Collective

OCTOBER 17, 2022

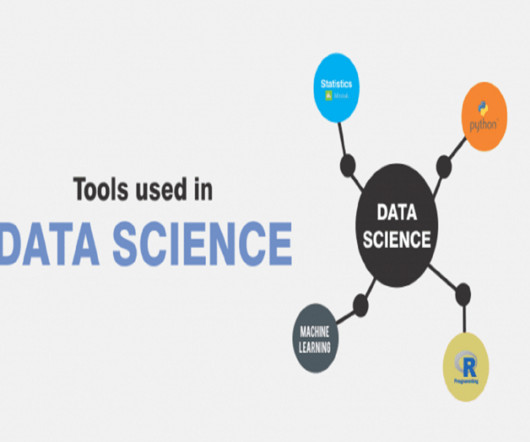

Data pipelines automatically fetch information from disparate sources, then consolidate and transform it before loading it into high-performing data storage. Data storage poses a number of challenges that data pipelines can help address. The article also covers how to choose the right data pipeline solution.
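
To make the fetch, consolidate, transform, and store flow concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the article's own implementation: the two in-memory sources (`CSV_SOURCE`, `JSON_SOURCE`), the `orders` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for the "high-performing data storage".

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample sources standing in for real, disparate systems.
CSV_SOURCE = "id,name,amount\n1,alice,10.5\n2,bob,7\n"
JSON_SOURCE = '[{"id": 3, "name": "Carol", "amount": "4.25"}]'


def extract():
    """Fetch raw records from each source and consolidate them into one list."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows += json.loads(JSON_SOURCE)
    return rows


def transform(rows):
    """Normalize types and name casing so every record shares one schema."""
    return [
        (int(r["id"]), str(r["name"]).strip().title(), float(r["amount"]))
        for r in rows
    ]


def load(records, conn):
    """Write the cleaned records into the target table (assumed name: orders)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


def run_pipeline(conn):
    """Run extract -> transform -> load, then return the stored rows."""
    load(transform(extract()), conn)
    return conn.execute(
        "SELECT id, name, amount FROM orders ORDER BY id"
    ).fetchall()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    for row in run_pipeline(connection):
        print(row)
```

A production pipeline would typically replace the in-memory strings with database, API, or file connectors and add scheduling, validation, and error handling, but the three stages keep the same shape.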
