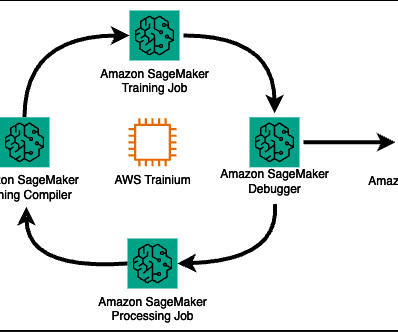

PEFT fine tuning of Llama 3 on SageMaker HyperPod with AWS Trainium

AWS Machine Learning Blog

DECEMBER 24, 2024

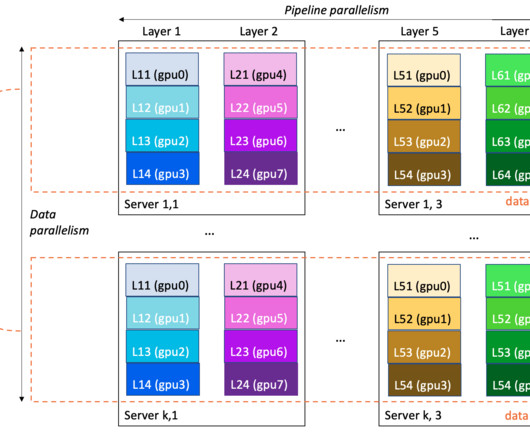

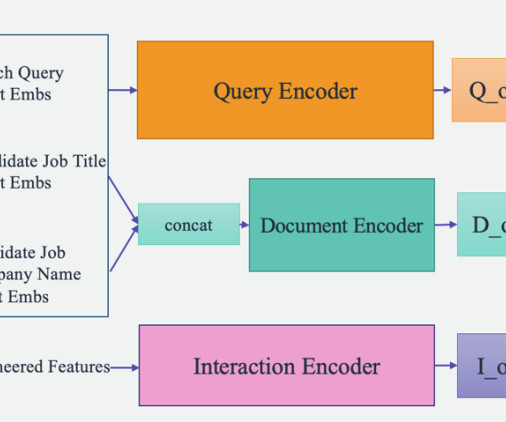

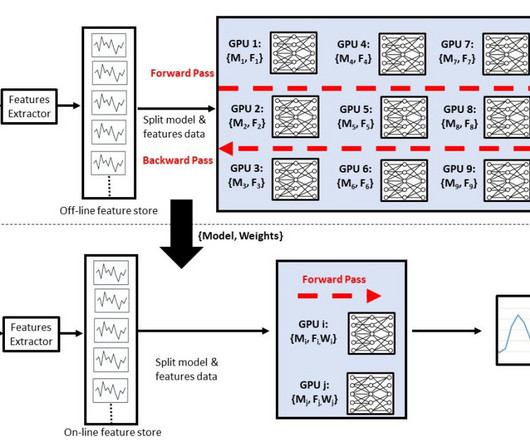

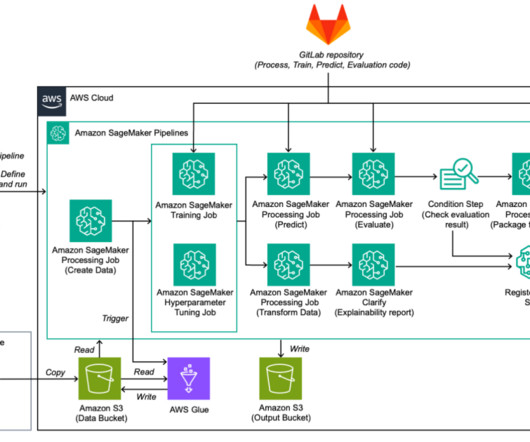

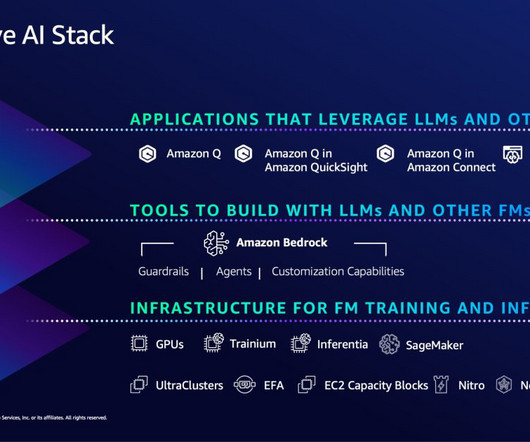

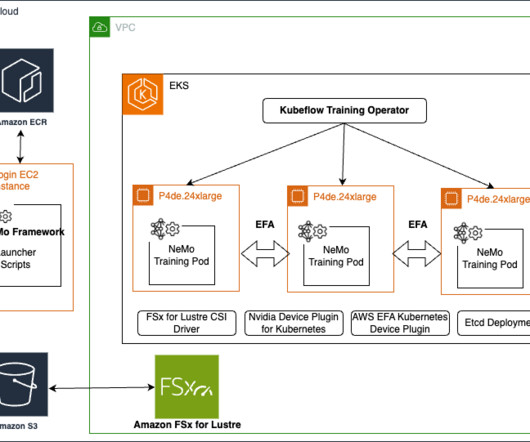

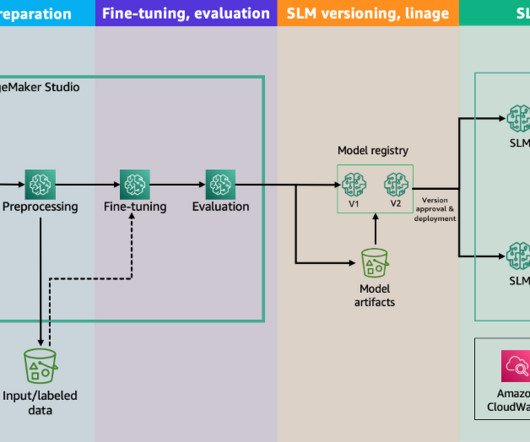

Setting up and configuring a distributed training environment can be complex, requiring expertise in server management, cluster configuration, networking, and distributed computing. Scheduler: Slurm is used as the job scheduler for the cluster. You can also customize your distributed training.
