Build a Serverless News Data Pipeline using ML on AWS Cloud

KDnuggets

NOVEMBER 18, 2021

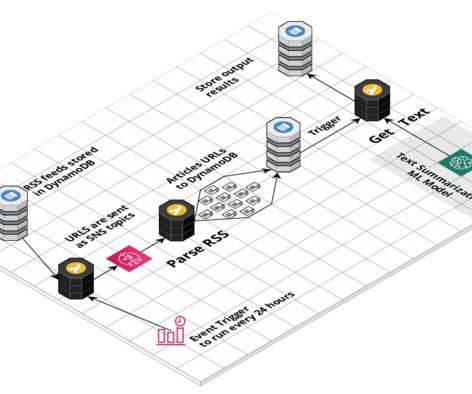

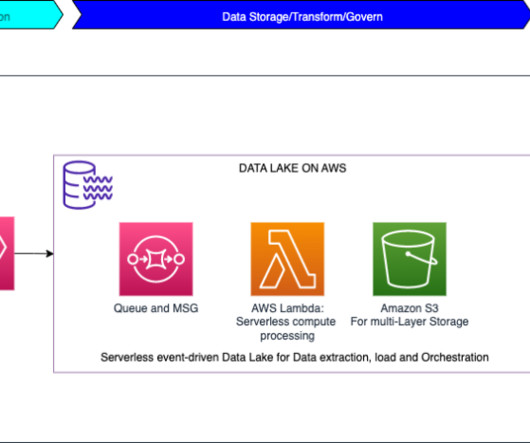

This is a guide to building a serverless data pipeline on AWS with a machine learning model deployed as a SageMaker endpoint.

Analytics Vidhya

MAY 26, 2023

Introduction Discover the ultimate guide to building a powerful data pipeline on AWS! In today’s data-driven world, organizations need efficient pipelines to collect, process, and leverage valuable data. With AWS, you can unleash the full potential of your data.

Analytics Vidhya

AUGUST 3, 2021

This article was published as a part of the Data Science Blogathon. Introduction: Apache Spark is a framework used in cluster computing environments. The post Building a Data Pipeline with PySpark and AWS appeared first on Analytics Vidhya.

Analytics Vidhya

JANUARY 15, 2021

I will admit, AWS Data Wrangler has become my go-to package for developing extract, transform, and load (ETL) data pipelines and other day-to-day. The post Using AWS Data Wrangler with AWS Glue Job 2.0 appeared first on Analytics Vidhya.

AWS Machine Learning Blog

NOVEMBER 26, 2024

AWS AI chips, Trainium and Inferentia, enable you to build and deploy generative AI models at higher performance and lower cost. The Datadog dashboard offers a detailed view of your AWS AI chip (Trainium or Inferentia) performance, such as the number of instances, availability, and AWS Region.

Analytics Vidhya

APRIL 23, 2024

It offers a scalable and extensible solution for automating complex workflows, automating repetitive tasks, and monitoring data pipelines. This article explores the intricacies of automating ETL pipelines using Apache Airflow on AWS EC2.

Analytics Vidhya

FEBRUARY 19, 2023

Introduction Data pipelines play a critical role in the processing and management of data in modern organizations. A well-designed data pipeline can help organizations extract valuable insights from their data, automate tedious manual processes, and ensure the accuracy of data processing.

Analytics Vidhya

FEBRUARY 6, 2023

Introduction: The demand for data to feed machine learning models, data science research, and time-sensitive insights is higher than ever; thus, processing the data becomes complex. To make these processes efficient, data pipelines are necessary.

Adrian Bridgwater for Forbes

NOVEMBER 28, 2023

Because data exists in so varied a set of structures and forms, we can do much with it, but that means we need ways to connect all our data.

AWS Machine Learning Blog

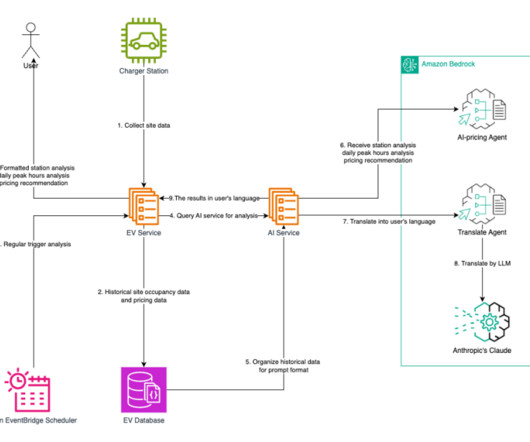

JANUARY 7, 2025

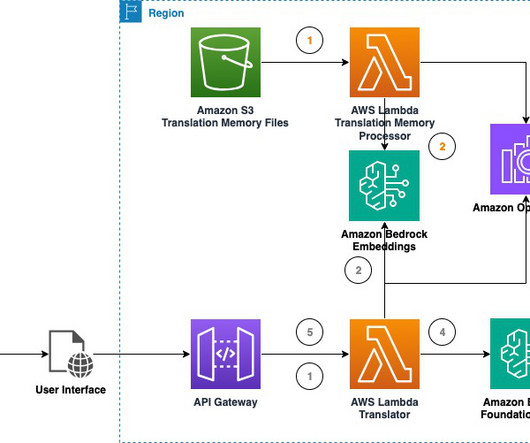

The translation playground could be adapted into a scalable serverless solution as represented by the following diagram using AWS Lambda, Amazon Simple Storage Service (Amazon S3), and Amazon API Gateway. To run the project code, make sure that you have fulfilled the AWS CDK prerequisites for Python.

MAY 30, 2025

Scaling and load balancing The gateway can handle load balancing across different servers, model instances, or AWS Regions so that applications remain responsive. The AWS Solutions Library offers solution guidance to set up a multi-provider generative AI gateway. Leave us a comment and we will be glad to collaborate.

AWS Machine Learning Blog

JANUARY 7, 2025

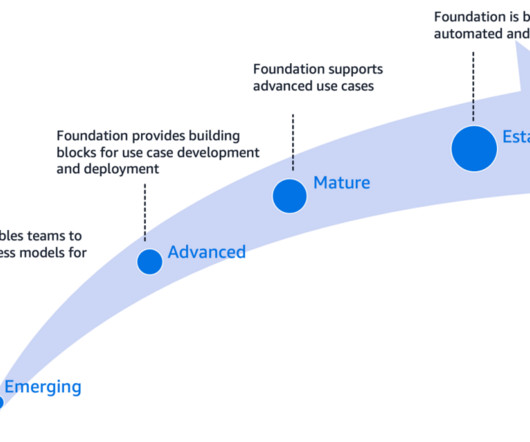

To address this need, the AWS generative AI best practices framework was launched within AWS Audit Manager, enabling auditing and monitoring of generative AI applications. Figure 1 depicts the system's functionalities and AWS services. Select AWS Generative AI Best Practices Framework for assessment. Choose Create assessment.

AWS Machine Learning Blog

JANUARY 15, 2025

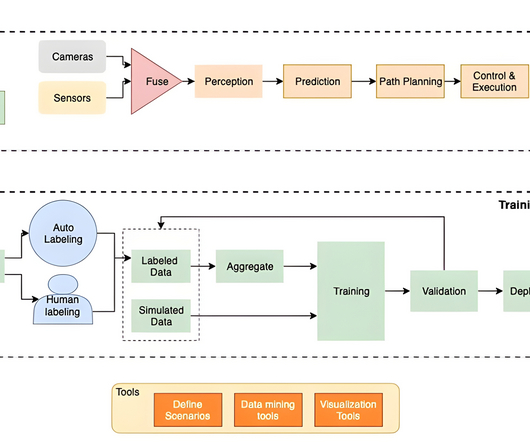

Powered by generative AI services on AWS and the multimodal capabilities of large language models (LLMs), HCLTech's AutoWise Companion provides a seamless and impactful experience. Technical architecture: The overall solution is implemented using AWS services and LangChain. AWS Glue: AWS Glue is used for data cataloging.

AWS Machine Learning Blog

APRIL 3, 2025

At the heart of this transformation is the OMRON Data & Analytics Platform (ODAP), an innovative initiative designed to revolutionize how the company harnesses its data assets. The robust security features provided by Amazon S3, including encryption and durability, were used to provide data protection.

How to Learn Machine Learning

DECEMBER 24, 2024

If you’re diving into the world of machine learning, AWS Machine Learning provides a robust and accessible platform to turn your data science dreams into reality. Whether you’re a solo developer or part of a large enterprise, AWS provides scalable solutions that grow with your needs. Hey dear reader!

NOVEMBER 27, 2024

While customers can perform some basic analysis within their operational or transactional databases, many still need to build custom data pipelines that use batch or streaming jobs to extract, transform, and load (ETL) data into their data warehouse for more comprehensive analysis. Create dbt models in dbt Cloud.

AWS Machine Learning Blog

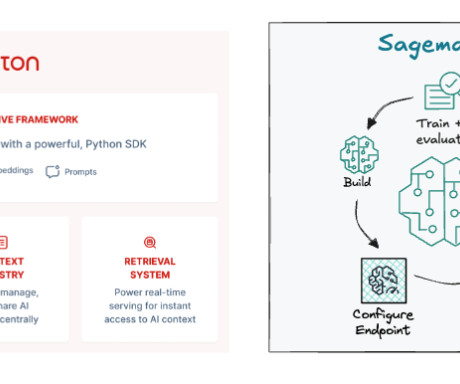

MARCH 5, 2025

By the end of this journey, you will be equipped to streamline your development process and apply Chronos to any time series data, transforming your forecasting approach. Click here to open the AWS console and follow along. Overview of SageMaker Pipelines We use SageMaker Pipelines to orchestrate training and evaluation experiments.

NOVEMBER 7, 2023

“Data is at the center of every application, process, and business decision,” wrote Swami Sivasubramanian, VP of Database, Analytics, and Machine Learning at AWS, and I couldn’t agree more. A common pattern customers use today is to build data pipelines to move data from Amazon Aurora to Amazon Redshift.

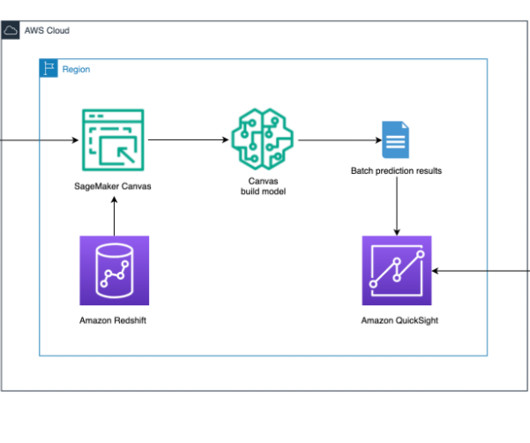

AWS Machine Learning Blog

OCTOBER 24, 2024

Prerequisites Before you begin, make sure you have the following prerequisites in place: An AWS account and role with the AWS Identity and Access Management (IAM) privileges to deploy the following resources: IAM roles. A provisioned or serverless Amazon Redshift data warehouse. Choose Create stack. Sohaib Katariwala is a Sr.

AWS Machine Learning Blog

FEBRUARY 21, 2025

Let's assume that the question "What date will AWS re:Invent 2024 occur?" The corresponding answer is also input as "AWS re:Invent 2024 takes place on December 2–6, 2024." If the question was "What's the schedule for AWS events in December?", This setup uses the AWS SDK for Python (Boto3) to interact with AWS services.

AWS Machine Learning Blog

DECEMBER 4, 2024

It seems straightforward at first for batch data, but the engineering gets even more complicated when you need to go from batch data to incorporating real-time and streaming data sources, and from batch inference to real-time serving. You can also find Tecton at AWS re:Invent.

AWS Machine Learning Blog

OCTOBER 24, 2024

Amazon Bedrock Agents is instrumental in customization and tailoring apps to help meet specific project requirements while protecting private data and securing their applications. These agents work with AWS managed infrastructure capabilities and Amazon Bedrock, reducing infrastructure management overhead.

AWS Machine Learning Blog

DECEMBER 4, 2024

SageMaker Unified Studio combines various AWS services, including Amazon Bedrock, Amazon SageMaker, Amazon Redshift, AWS Glue, Amazon Athena, and Amazon Managed Workflows for Apache Airflow (MWAA), into a comprehensive data and AI development platform. Navigate to the AWS Secrets Manager console and find the secret -api-keys.

JUNE 3, 2025

In the following section, we dive deep into these steps and the AWS services used. They needed a solution that could support rapid expansion, handle high data volumes, and deliver consistent performance across AWS Regions. About the Authors Ray Wang is a Senior Solutions Architect at AWS.

AWS Machine Learning Blog

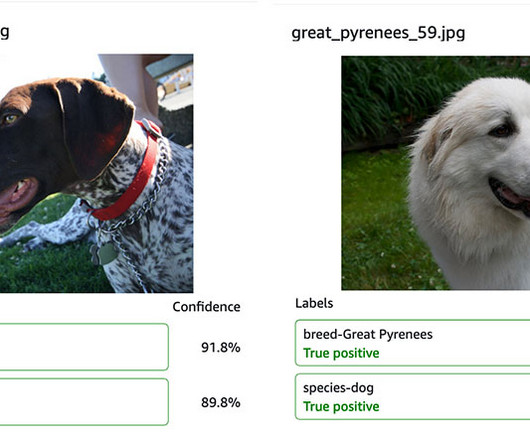

OCTOBER 18, 2023

This post details how Purina used Amazon Rekognition Custom Labels, AWS Step Functions, and other AWS Services to create an ML model that detects the pet breed from an uploaded image and then uses the prediction to auto-populate the pet attributes. AWS CodeBuild is a fully managed continuous integration service in the cloud.

Adrian Bridgwater for Forbes

DECEMBER 1, 2022

What businesses need from cloud computing is the power to work on their data without having to transport it around between different clouds, different databases and different repositories, different integrations to third-party applications, different data pipelines and different compute engines.

Data Science Dojo

JULY 6, 2023

Data engineering tools are software applications or frameworks specifically designed to facilitate the process of managing, processing, and transforming large volumes of data. Amazon Redshift: Amazon Redshift is a cloud-based data warehousing service provided by Amazon Web Services (AWS).

IBM Journey to AI blog

MAY 15, 2024

Data engineers build data pipelines, which are called data integration tasks or jobs, as incremental steps to perform data operations and orchestrate these data pipelines in an overall workflow. Organizations can harness the full potential of their data while reducing risk and lowering costs.

AWS Machine Learning Blog

SEPTEMBER 18, 2024

In addition to its groundbreaking AI innovations, Zeta Global has harnessed Amazon Elastic Container Service (Amazon ECS) with AWS Fargate to deploy a multitude of smaller models efficiently. It simplifies feature access for model training and inference, significantly reducing the time and complexity involved in managing data pipelines.

AWS Machine Learning Blog

FEBRUARY 23, 2023

For more information about distributed training with SageMaker, refer to the AWS re:Invent 2020 video Fast training and near-linear scaling with DataParallel in Amazon SageMaker and The science behind Amazon SageMaker’s distributed-training engines. In a later post, we will do a deep dive into the DNNs used by ADAS systems.

AWS Machine Learning Blog

MARCH 1, 2023

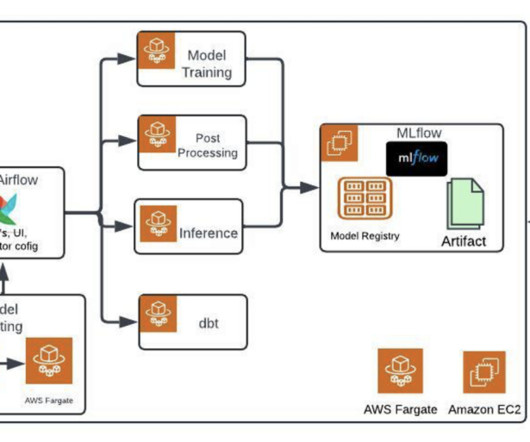

In this post, we share how Kakao Games and the Amazon Machine Learning Solutions Lab teamed up to build a scalable and reliable LTV prediction solution by using AWS data and ML services such as AWS Glue and Amazon SageMaker. The ETL pipeline, MLOps pipeline, and ML inference should be rebuilt in a different AWS account.

AWS Machine Learning Blog

DECEMBER 18, 2024

Training an LLM is a compute-intensive and complex process, which is why Fastweb, as a first step in their AI journey, used AWS generative AI and machine learning (ML) services such as Amazon SageMaker HyperPod. The team opted for fine-tuning on AWS.

MAY 21, 2025

This post highlights how the UBC CIC uses Amazon Web Services (AWS) to accelerate generative AI development, sharing lessons learned, tools used, and actionable insights you can apply to your projects. Security in generative AI prototyping UBC CIC observes the shared responsibility model through the Amazon Bedrock Data protection features.

AWS Machine Learning Blog

FEBRUARY 1, 2024

Consider the following picture, which is an AWS view of the a16z emerging application stack for large language models (LLMs). This pipeline could be a batch pipeline if you prepare contextual data in advance, or a low-latency pipeline if you’re incorporating new contextual data on the fly.

Data Science Dojo

FEBRUARY 20, 2023

Spark is well suited to applications that involve large volumes of data, real-time computing, model optimization, and deployment. Read about Apache Zeppelin: Magnum Opus of MLOps in detail AWS SageMaker AWS SageMaker is an AI service that allows developers to build, train and manage AI models.

The MLOps Blog

MAY 17, 2023

We also discuss different types of ETL pipelines for ML use cases and provide real-world examples of their use to help data engineers choose the right one. What is an ETL data pipeline in ML? Xoriant It is common to use ETL data pipeline and data pipeline interchangeably.

AWS Machine Learning Blog

OCTOBER 23, 2024

SnapLogic uses Amazon Bedrock to build its platform, capitalizing on the proximity to data already stored in Amazon Web Services (AWS). Control plane and data plane implementation SnapLogic’s Agent Creator platform follows a decoupled architecture, separating the control plane and data plane for enhanced security and scalability.

Precisely

APRIL 11, 2024

In an era where cloud technology is not just an option but a necessity for competitive business operations, the collaboration between Precisely and Amazon Web Services (AWS) has set a new benchmark for mainframe and IBM i modernization. Solution page Precisely on Amazon Web Services (AWS) Precisely brings data integrity to the AWS cloud.

phData

AUGUST 6, 2024

As today’s world keeps progressing towards data-driven decisions, organizations must have quality data created from efficient and effective data pipelines. For customers in Snowflake, Snowpark is a powerful tool for building these effective and scalable data pipelines.

Pickl AI

DECEMBER 26, 2024

Each platform offers unique capabilities tailored to varying needs, making the platform a critical decision for any Data Science project. Major Cloud Platforms for Data Science: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP) dominate the cloud market with their comprehensive offerings.

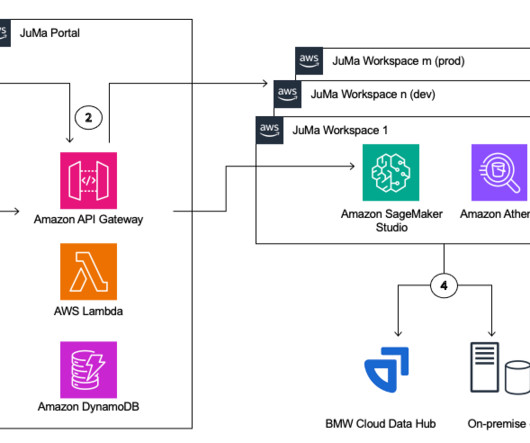

NOVEMBER 24, 2023

In this post, we will talk about how BMW Group, in collaboration with AWS Professional Services, built its Jupyter Managed (JuMa) service to address these challenges. For example, teams using these platforms missed an easy migration of their AI/ML prototypes to the industrialization of the solution running on AWS.

AWS Machine Learning Blog

FEBRUARY 5, 2025

The following diagram illustrates the data pipeline for indexing and query in the foundational search architecture. The listing indexer AWS Lambda function continuously polls the queue and processes incoming listing updates. He has specialization in data strategy, machine learning and Generative AI.

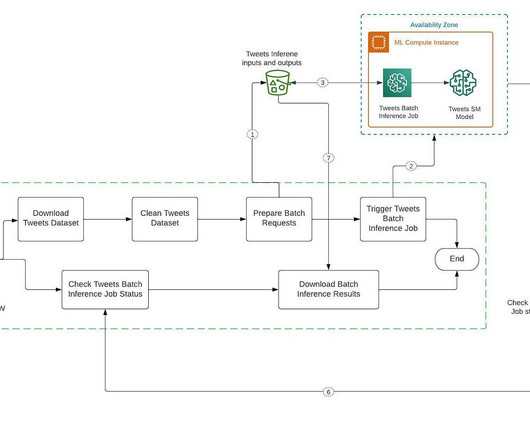

Mlearning.ai

APRIL 6, 2023

Automate and streamline our ML inference pipeline with SageMaker and Airflow. Building an inference data pipeline on large datasets is a challenge many companies face. We use DAGs (Directed Acyclic Graphs) in Airflow; a DAG describes how to run a workflow by defining the pipeline in Python, that is, as configuration as code.
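As a rough illustration of the "pipeline as a DAG defined in Python" idea from the teaser above, the sketch below uses only the standard library's `graphlib` module rather than Airflow itself. The task names (`extract`, `preprocess`, `batch_inference`, `publish`) and the `run_order` helper are hypothetical and do not come from the linked article; an actual Airflow DAG would be declared with Airflow's own operators.

```python
from graphlib import TopologicalSorter

# Hypothetical inference pipeline: each task maps to the set of tasks
# that must finish before it can start (its upstream dependencies).
PIPELINE = {
    "extract": set(),
    "preprocess": {"extract"},
    "batch_inference": {"preprocess"},
    "publish": {"batch_inference"},
}


def run_order(dag):
    """Return the task names in an order that respects every dependency."""
    return list(TopologicalSorter(dag).static_order())


if __name__ == "__main__":
    for task in run_order(PIPELINE):
        print("would run:", task)
```

Because the workflow is plain Python data, it can be versioned and reviewed like any other code, which is the sense of "configuration as code" in the teaser. If a dependency cycle is introduced, `TopologicalSorter` raises `graphlib.CycleError`.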
