Discover the Most Important Fundamentals of Data Engineering

Pickl AI

NOVEMBER 4, 2024

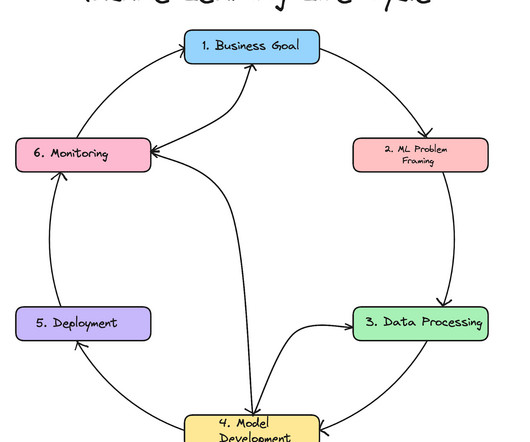

Key Takeaways

- Data Engineering is vital for transforming raw data into actionable insights.
- Key components include data modelling, warehousing, pipelines, and integration.
- Effective data governance enhances quality and security throughout the data lifecycle.

What is Data Engineering?
