Modern NLP: A Detailed Overview. Part 2: GPTs

Towards AI

JULY 23, 2023

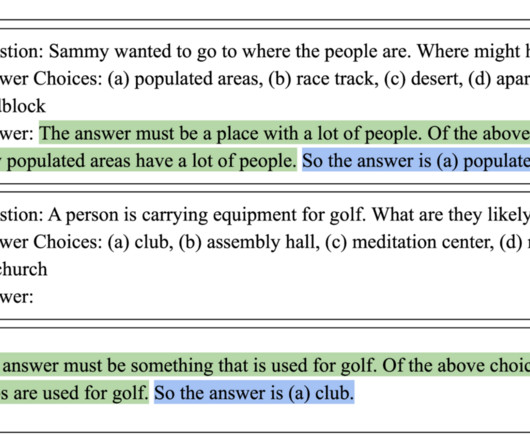

Last Updated on July 25, 2023 by Editorial Team. Author(s): Abhijit Roy. Originally published on Towards AI.

Semi-Supervised Sequence Learning

As we all know, supervised learning has a drawback: it requires a huge labeled dataset for training. In 2015, Andrew M. […] At this point, datasets like […]
