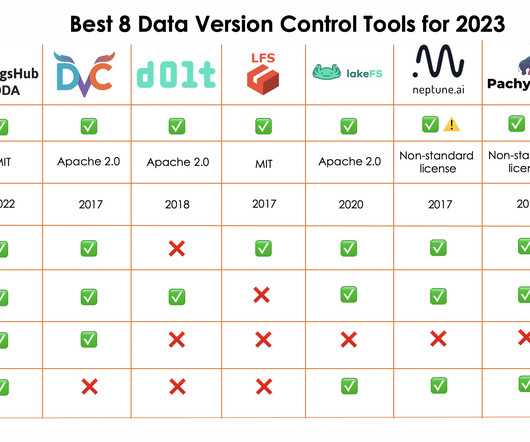

Best 8 Data Version Control Tools for Machine Learning 2024

DagsHub

DECEMBER 11, 2023

Released in 2022, DagsHub’s Direct Data Access (DDA) lets data scientists and machine learning engineers stream files from a DagsHub repository without first downloading them to their local environment. This avoids a lengthy download to local disk before model training can start.
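To make the streaming idea concrete, here is a minimal sketch of on-demand file access in plain Python. It is not DagsHub's client API or implementation: `LazyRemoteFiles`, `fake_fetch`, and the in-memory `FAKE_REMOTE` dictionary are hypothetical names invented for illustration, with the dictionary standing in for a remote repository. The point is only that a file's bytes are transferred when the file is first opened, not ahead of time.

```python
import io
from typing import Callable, Dict


class LazyRemoteFiles:
    """Fetch a file's bytes only when it is first opened, then cache them."""

    def __init__(self, fetch: Callable[[str], bytes]) -> None:
        self._fetch = fetch                 # callable that retrieves one file
        self._cache: Dict[str, bytes] = {}  # files already transferred
        self.fetch_count = 0                # number of remote fetches so far

    def open(self, path: str) -> io.BytesIO:
        # Transfer the file on first access; later opens reuse the cache.
        if path not in self._cache:
            self._cache[path] = self._fetch(path)
            self.fetch_count += 1
        return io.BytesIO(self._cache[path])


# Hypothetical stand-in for a remote repository (assumption for this sketch).
FAKE_REMOTE = {
    "data/a.csv": b"x,y\n1,2\n",
    "data/b.csv": b"x,y\n3,4\n",
}


def fake_fetch(path: str) -> bytes:
    """Pretend to download one file from the remote repository."""
    return FAKE_REMOTE[path]


files = LazyRemoteFiles(fake_fetch)
first = files.open("data/a.csv").read()  # only this file is fetched
```

In this sketch, a training loop that reads `data/a.csv` can start right away, and `data/b.csv` is never transferred unless something opens it.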
