Big Data vs. Data Science: Demystifying the Buzzwords

Pickl AI

APRIL 21, 2025

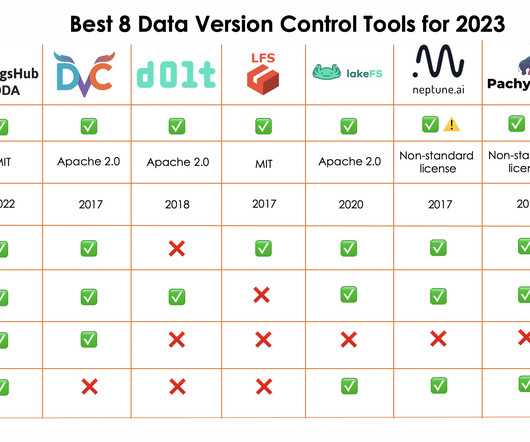

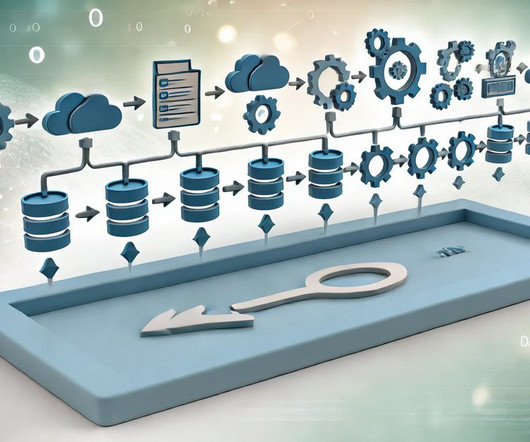

Key Takeaways

- Big Data focuses on collecting, storing, and managing massive datasets.
- Data Science extracts insights and builds predictive models from processed data.
- Big Data technologies include Hadoop, Spark, and NoSQL databases.
- Data Science uses Python, R, and machine learning frameworks.
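To make the division of labor concrete, here is a minimal Python sketch. It is only an illustration under stated assumptions: the records, the `aggregate_daily` and `fit_line` helpers, and the sales scenario are all hypothetical and not from the article. The in-memory list stands in for what would, at real scale, be a distributed store handled by tools such as Hadoop or Spark.

```python
from collections import defaultdict

# Hypothetical raw event records: (store, day, sale amount).
# In a real Big Data setting these would sit in a distributed store,
# not in a Python list.
raw_events = [
    ("north", 1, 10.0), ("north", 1, 2.0),
    ("north", 2, 14.0),
    ("north", 3, 16.0),
    ("north", 4, 16.0), ("north", 4, 2.0),
]


def aggregate_daily(events):
    """Data-management step: collapse raw events into per-day totals."""
    totals = defaultdict(float)
    for _store, day, amount in events:
        totals[day] += amount
    return sorted(totals.items())


def fit_line(points):
    """Data Science step: least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


daily = aggregate_daily(raw_events)      # processed data
slope, intercept = fit_line(daily)       # predictive model
forecast_day_5 = slope * 5 + intercept   # insight drawn from the model
```

The first function only organizes data; the second is where a model is built and a prediction is made, which mirrors the split the takeaways describe.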
