Build a domain-aware data preprocessing pipeline: A multi-agent collaboration approach

MAY 20, 2025

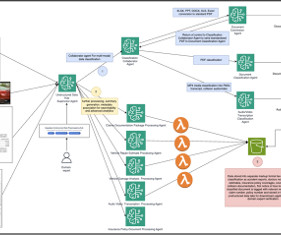

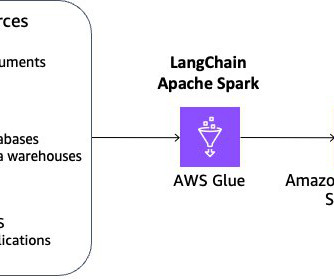

The end-to-end workflow features a supervisor agent at the center, classification and conversion agents branching off, a human-in-the-loop step, and Amazon Simple Storage Service (Amazon S3) as the final unstructured data lake destination. Make sure that all incoming data eventually lands, along with its metadata, in the S3 data lake.
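To make the flow concrete, the following is a minimal Python sketch of the routing logic, not the implementation behind the diagram. Every name in it (`Record`, `InMemoryLake`, `classify`, `convert`, `supervise`, the file-extension rules, and the `0.8` confidence threshold) is a hypothetical stand-in chosen for illustration. An in-memory dictionary plays the role of the S3 data lake so that the example runs without AWS credentials.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Tuple


@dataclass
class Record:
    """One incoming item plus the metadata that travels with it."""
    key: str
    payload: bytes
    metadata: Dict[str, str] = field(default_factory=dict)


class InMemoryLake:
    """Stand-in for the S3 data lake: object key -> (body, metadata)."""

    def __init__(self) -> None:
        self.objects: Dict[str, Tuple[bytes, Dict[str, str]]] = {}

    def put(self, record: Record) -> None:
        self.objects[record.key] = (record.payload, dict(record.metadata))


def classify(record: Record) -> Tuple[str, float]:
    """Hypothetical classification agent: returns (label, confidence)."""
    if record.key.endswith(".csv"):
        return "tabular", 0.95
    if record.key.endswith(".txt"):
        return "text", 0.90
    return "unknown", 0.30


def convert(record: Record, label: str) -> Record:
    """Hypothetical conversion agent: a pass-through that only tags the record."""
    record.metadata["converted_from"] = label
    return record


def supervise(
    record: Record,
    lake: InMemoryLake,
    review: Callable[[Record, str], str],
    threshold: float = 0.8,  # assumed cutoff for escalating to a human
) -> Record:
    """Supervisor: classify, escalate low-confidence items, convert, store."""
    label, confidence = classify(record)
    if confidence < threshold:
        # Human-in-the-loop step: a reviewer confirms or corrects the label.
        label = review(record, label)
        record.metadata["reviewed_by_human"] = "true"
    else:
        record.metadata["reviewed_by_human"] = "false"
    record.metadata["classification"] = label
    record = convert(record, label)
    # Every record, reviewed or not, lands in the lake with its metadata.
    lake.put(record)
    return record
```

Calling `supervise(Record("scan.bin", b"\x00"), lake, review=my_reviewer)` would send the low-confidence item through the reviewer callback before storing it. In a real deployment, `InMemoryLake.put` could be replaced by a call such as boto3's `put_object` with its `Metadata` argument, so that the metadata is stored alongside the object in Amazon S3.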
