Data Integrity Trends for 2023

Precisely

JANUARY 17, 2023

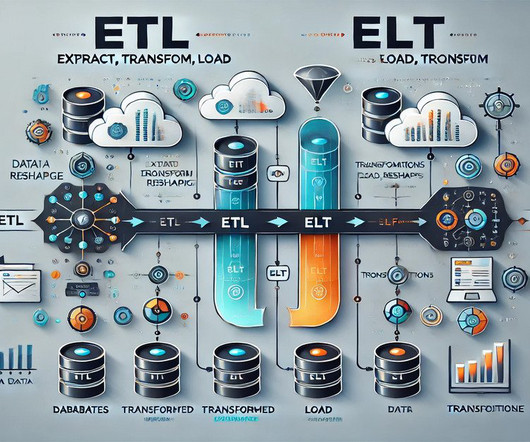

Data Volume, Variety, and Velocity Raise the Bar

Corporate IT landscapes are larger and more complex than ever. Cloud computing offers some advantages in terms of scalability and elasticity, yet it has also led to higher-than-ever volumes of data. That approach assumes that good data quality will be self-sustaining.
