Build Data Pipelines: Comprehensive Step-by-Step Guide

Pickl AI

JULY 8, 2024

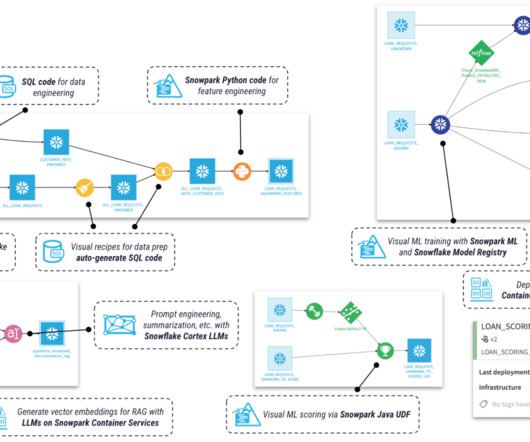

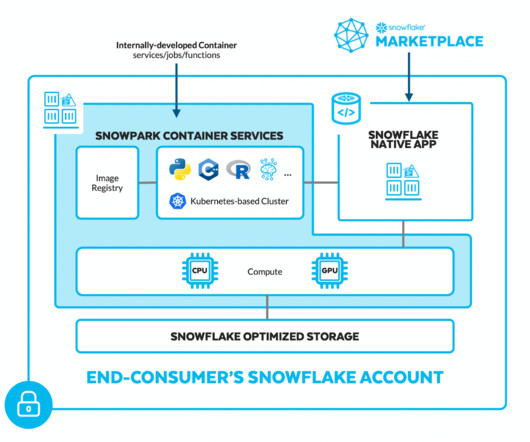

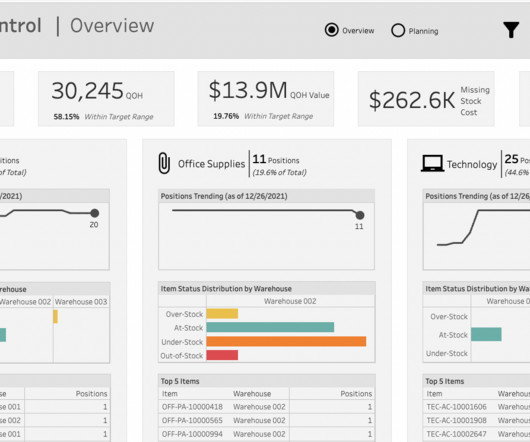

Summary: This blog explains how to build efficient data pipelines, detailing each step from data collection to final delivery.

Introduction

Data pipelines play a pivotal role in modern data architecture by seamlessly transporting and transforming raw data into valuable insights.
