Data integration

Dataconomy

JUNE 18, 2025

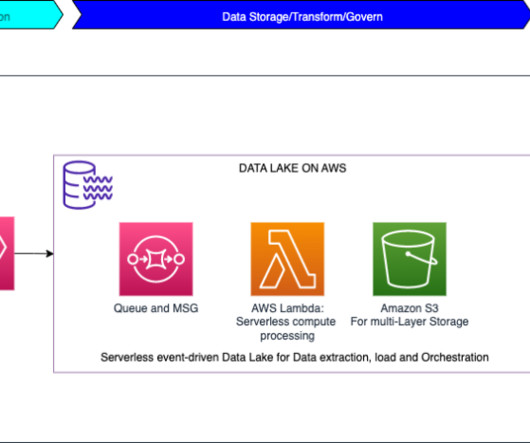

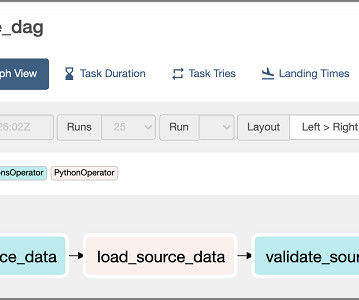

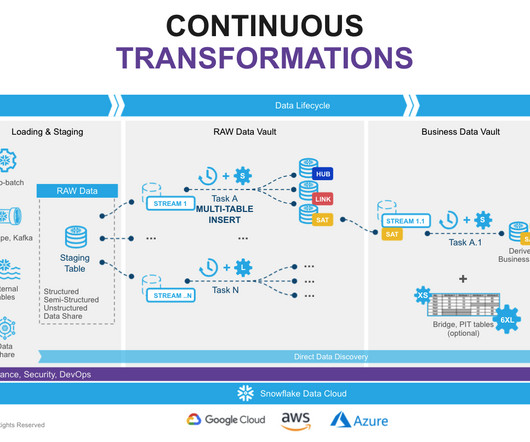

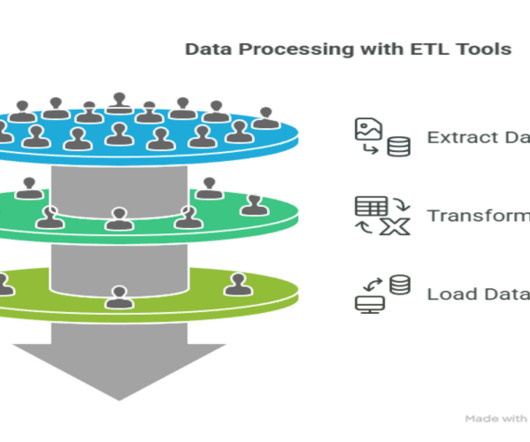

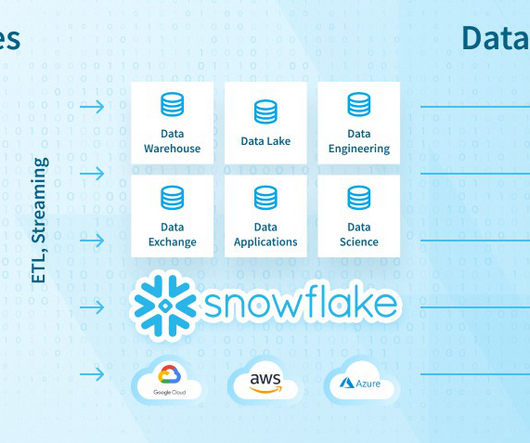

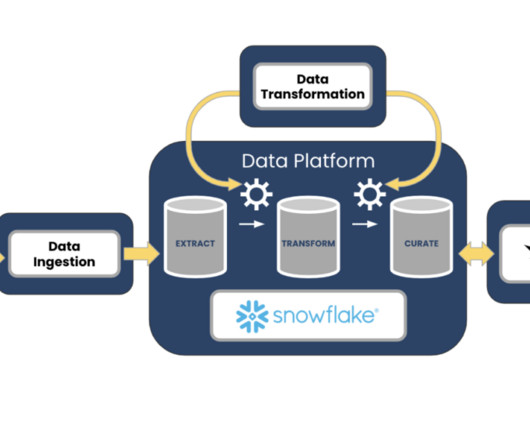

Feeding data for analytics

Integrated data is essential for populating data warehouses, data lakes, and lakehouses, ensuring that analysts have access to complete datasets for their work.

Best practices for data integration

Following established best practices makes successful data integration outcomes far more likely.
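To make the analytics use case concrete, here is a minimal sketch of integrating two sources into one queryable table. It assumes two hypothetical inputs: customer records from a CRM and order amounts from a separate billing system. An in-memory SQLite database stands in for a warehouse or lakehouse. The names `crm_customers`, `billing_orders`, `integrate`, `load`, and `customer_spend` are illustrative only. They are not taken from Dataconomy or from any specific integration tool.

```python
import sqlite3

# Hypothetical source 1: customer records exported from a CRM system.
crm_customers = [
    {"customer_id": 1, "name": "Ada", "region": "EU"},
    {"customer_id": 2, "name": "Grace", "region": "US"},
]

# Hypothetical source 2: individual order amounts from a separate billing system.
billing_orders = [
    {"customer_id": 1, "amount": 120.0},
    {"customer_id": 1, "amount": 80.0},
    {"customer_id": 2, "amount": 45.5},
]


def integrate(customers, orders):
    """Combine both sources into one row per customer with their total spend."""
    totals = {}
    for order in orders:
        cid = order["customer_id"]
        totals[cid] = totals.get(cid, 0.0) + order["amount"]
    # Customers with no orders still appear, with a total spend of 0.0.
    return [
        (c["customer_id"], c["name"], c["region"], totals.get(c["customer_id"], 0.0))
        for c in customers
    ]


def load(rows, conn):
    """Load integrated rows into a table analysts can query (SQLite stands in for a warehouse)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer_spend "
        "(customer_id INTEGER PRIMARY KEY, name TEXT, region TEXT, total_spend REAL)"
    )
    # INSERT OR REPLACE makes re-running the load idempotent per customer_id.
    conn.executemany("INSERT OR REPLACE INTO customer_spend VALUES (?, ?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(integrate(crm_customers, billing_orders), conn)

# An analyst can now query the combined dataset instead of each source separately.
for row in conn.execute(
    "SELECT region, SUM(total_spend) FROM customer_spend GROUP BY region ORDER BY region"
):
    print(row)
```

Neither source on its own can answer "how much does each region spend?" The question becomes a single query only after the two sources are joined on a shared key and loaded into one place. A production pipeline would add validation, scheduling, and incremental loads.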
