Acceleration Unlocked: DS3_v2 Instance Types on Azure now supported by Photon

databricks

MAY 1, 2023

At Databricks, we offer maximal flexibility for choosing compute for ETL and ML/AI workloads. Staying true to the theme of flexibility, we announce.

databricks

MAY 1, 2023

At Databricks, we offer maximal flexibility for choosing compute for ETL and ML/AI workloads. Staying true to the theme of flexibility, we announce.

databricks

JUNE 11, 2025

It eliminates fragile ETL pipelines and complex infrastructure, enabling teams to move faster and deliver intelligent applications on a unified data platform In this blog, we propose a new architecture for OLTP databases called a lakebase. Deeply integrated with the lakehouse, Lakebase simplifies operational data workflows.

This site is protected by reCAPTCHA and the Google Privacy Policy and Terms of Service apply.

databricks

JUNE 11, 2025

" — James Lin, Head of AI ML Innovation, Experian The Path Forward: From Lab to Production in Days, Not Months Early customers are already experiencing the transformation Agent Bricks delivers – accuracy improvements that double performance benchmarks and reduce development timelines from weeks to a single day.

databricks

JUNE 11, 2025

Bring your real-time online ML workloads to Databricks, and let us handle the infrastructure and reliability challenges so you can focus on the AI model development. Our enhanced Model Serving infrastructure now supports over 250,000 queries per second (QPS).

databricks

JUNE 12, 2025

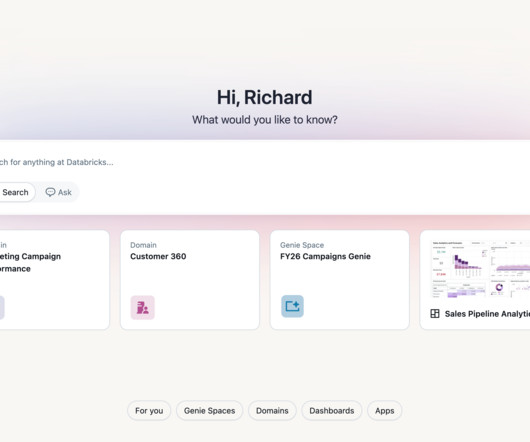

Skip to main content Login Why Databricks Discover For Executives For Startups Lakehouse Architecture Mosaic Research Customers Customer Stories Partners Cloud Providers Databricks on AWS, Azure, GCP, and SAP Consulting & System Integrators Experts to build, deploy and migrate to Databricks Technology Partners Connect your existing tools to your (..)

Data Science Dojo

OCTOBER 31, 2024

Applied Machine Learning Scientist Description : Applied ML Scientists focus on translating algorithms into scalable, real-world applications. Demand for applied ML scientists remains high, as more companies focus on AI-driven solutions for scalability.

Data Science Dojo

FEBRUARY 20, 2023

Machine learning (ML) is the technology that automates tasks and provides insights. It comes in many forms, with a range of tools and platforms designed to make working with ML more efficient. It features an ML package with machine learning-specific APIs that enable the easy creation of ML models, training, and deployment.

Let's personalize your content