Reduce ML training costs with Amazon SageMaker HyperPod

AWS Machine Learning Blog

APRIL 10, 2025

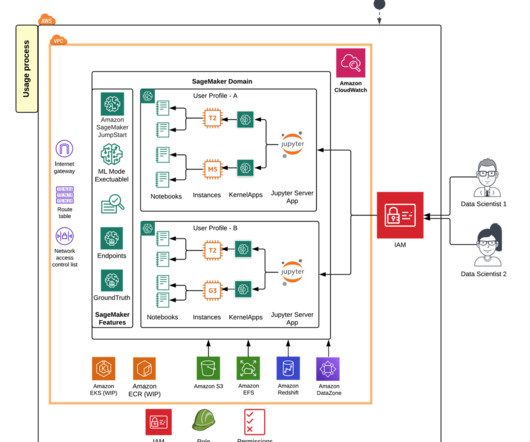

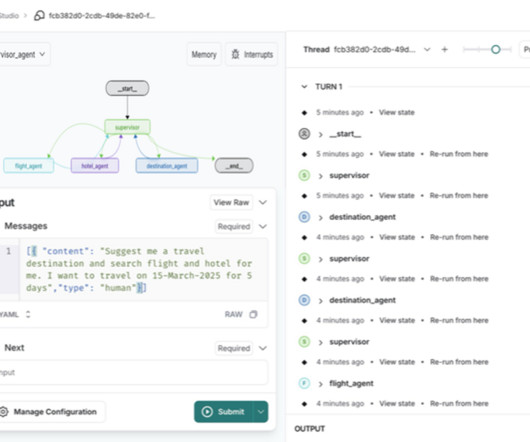

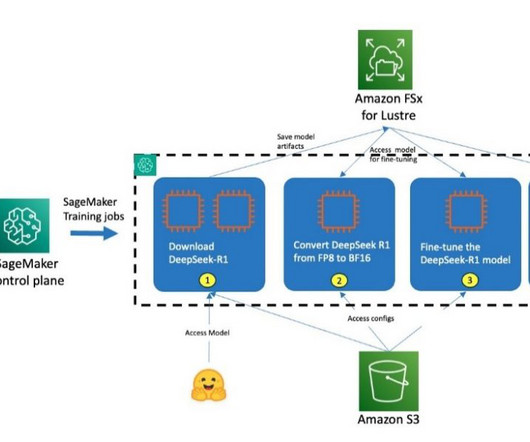

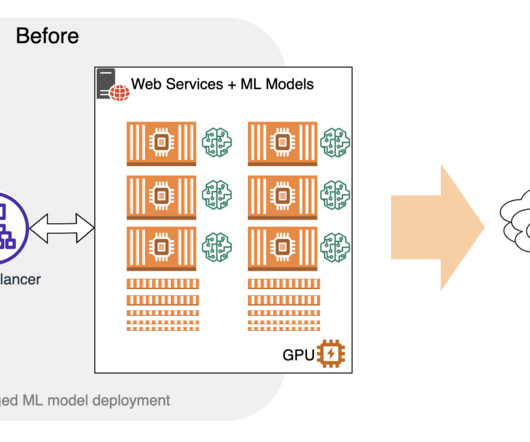

This means users can build resilient clusters for machine learning (ML) workloads and develop or fine-tune frontier models, as demonstrated by organizations such as Luma Labs and Perplexity AI. Frontier model builders can further improve model performance using the ML tools built into SageMaker HyperPod.
