Maximizing your event-driven architecture investments: Unleashing the power of Apache Kafka with IBM Event Automation

IBM Journey to AI blog

FEBRUARY 12, 2024

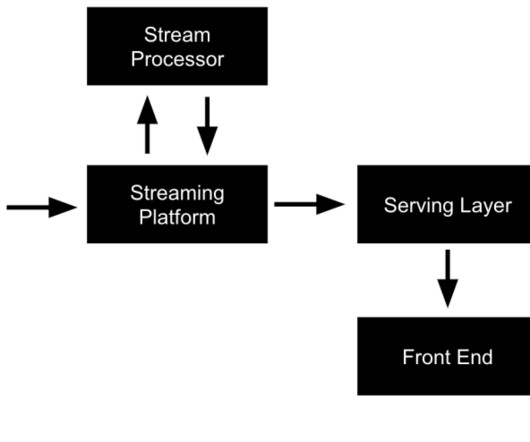

At the forefront of the event-driven revolution is Apache Kafka, the widely recognized and dominant open-source technology for event streaming. While most enterprises already recognize that Apache Kafka provides a strong foundation for event-driven architecture (EDA), they often fall short of unlocking its true potential.
