How to Build a Scalable Data Architecture with Apache Kafka

KDnuggets

APRIL 5, 2023

Learn about Apache Kafka architecture and its implementation using a real-world use case of a taxi booking app.

JUNE 3, 2025

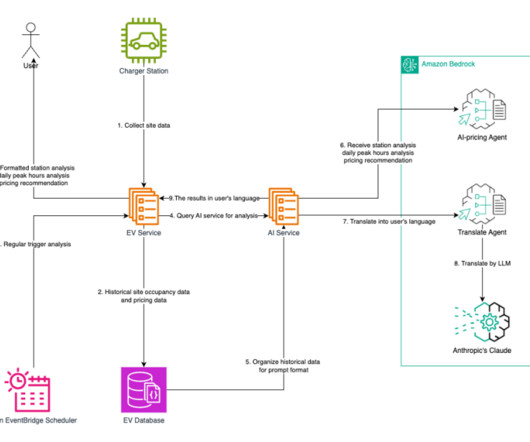

The data is then transmitted to Amazon Managed Streaming for Apache Kafka (Amazon MSK) to facilitate high-throughput, reliable streaming. As a perpetual learner, he's doing research in Visual Language Models, Responsible AI & Computer Vision and authoring a book on ML engineering.

Precisely

SEPTEMBER 12, 2023

Precisely data integrity solutions fuel your Confluent and Apache Kafka streaming data pipelines with trusted data that has maximum accuracy, consistency, and context, and we’re ready to share more with you at the upcoming Current 2023. Book your meeting with us at Confluent’s Current 2023. See you in San Jose!

ODSC - Open Data Science

MAY 24, 2023

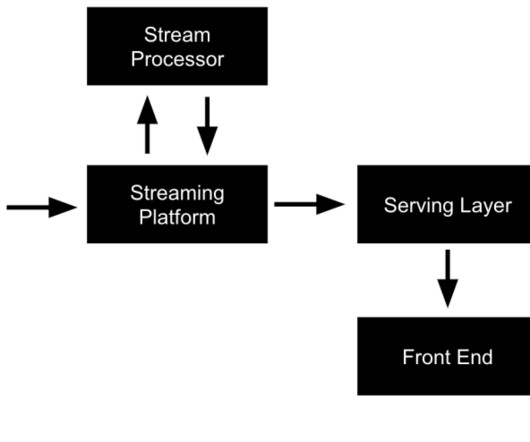

Streaming Machine Learning Without a Data Lake: The combination of data streaming and ML enables you to build one scalable, reliable, but also simple infrastructure for all machine learning tasks using the Apache Kafka ecosystem. Here’s why.

phData

SEPTEMBER 27, 2024

If transitional modeling is like building with Legos, then activity schema modeling is like creating a flip book animation of your customer’s journey. Technologies like Apache Kafka, often used in modern CDPs, use log-based approaches to stream customer events between systems in real time. What is Activity Schema Modeling?
