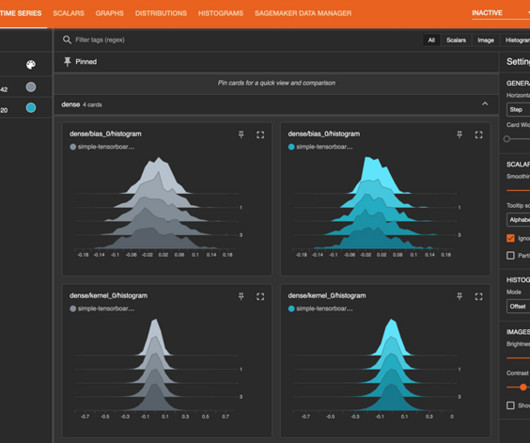

Enhanced observability for AWS Trainium and AWS Inferentia with Datadog

AWS Machine Learning Blog

NOVEMBER 26, 2024

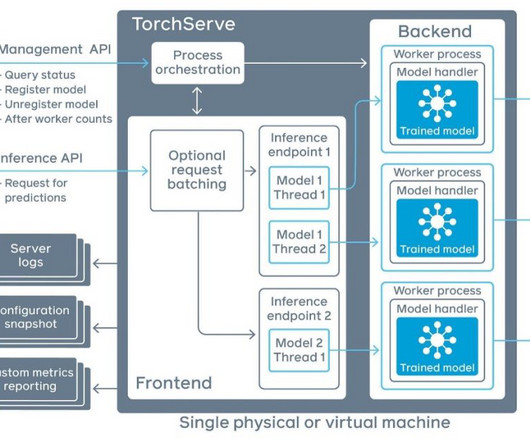

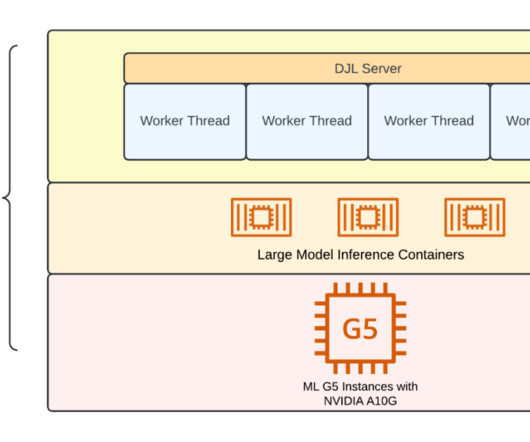

Neuron is the SDK used to run deep learning workloads on Trainium and Inferentia based instances. AWS AI chips, Trainium and Inferentia, enable you to build and deploy generative AI models at higher performance and lower cost. To get started, see AWS Inferentia and AWS Trainium Monitoring.

Let's personalize your content