Common Machine Learning Obstacles

KDnuggets

SEPTEMBER 9, 2019

In this blog, Seth DeLand of MathWorks discusses two of the most common obstacles in machine learning: choosing the right classification model and eliminating data overfitting.

IBM Journey to AI blog

APRIL 13, 2023

After developing a machine learning model, you need a place to run your model and serve predictions. Anyone with a knowledge of SQL and access to a Db2 instance where the in-database ML feature is enabled can easily learn to build and use a machine learning model in the database.

JUNE 3, 2025

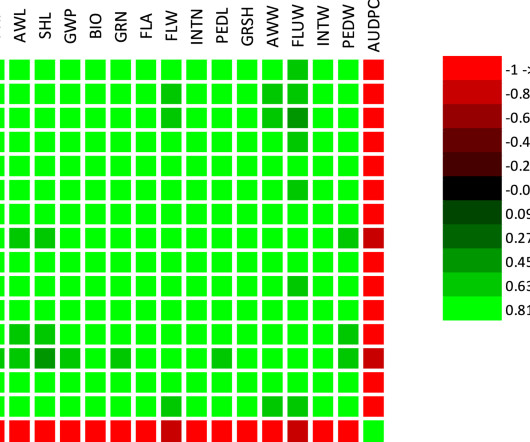

By employing machine-learning models, this study uses agronomic and molecular features to predict powdery mildew disease resistance in barley (Hordeum vulgare L.). Subsequently, Decision Tree, Random Forest, Neural Network, and Gaussian Process Regression models were compared using MAE, RMSE, and R² metrics.
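For readers unfamiliar with the three metrics named in this summary, here is a minimal sketch of how MAE, RMSE, and R² are commonly computed for a regression model. It is not taken from the study: the function name `regression_metrics` and the sample values are hypothetical, and the formulas are the standard textbook definitions.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MAE, RMSE, and R^2 for paired true and predicted values.

    Hypothetical helper for illustration; not code from the study.
    """
    n = len(y_true)
    if n == 0 or n != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal in length")

    errors = [t - p for t, p in zip(y_true, y_pred)]

    # Mean absolute error: average size of the errors, ignoring sign.
    mae = sum(abs(e) for e in errors) / n

    # Root mean squared error: penalizes large errors more heavily than MAE.
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)

    # R^2: share of the variance in y_true that the predictions explain.
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")

    return {"mae": mae, "rmse": rmse, "r2": r2}


if __name__ == "__main__":
    # Made-up resistance scores, used only to exercise the function.
    observed = [3.0, 5.0, 2.5, 7.0]
    predicted = [2.5, 5.0, 4.0, 8.0]
    print(regression_metrics(observed, predicted))
```

Lower MAE and RMSE indicate smaller errors, while an R² closer to 1 indicates that the model explains more of the variation in the observed values. Comparing several models on the same held-out data with these metrics is one common way to rank them.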

Heartbeat

SEPTEMBER 13, 2023

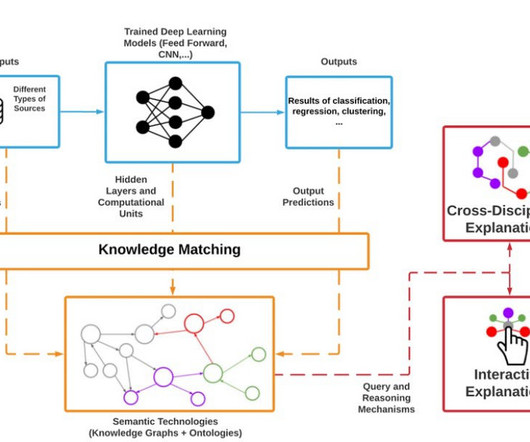

This guide covers explainability in machine learning and AI systems. Explainability involves providing insight into the decisions and predictions made by artificial intelligence (AI) systems and machine learning models.

AWS Machine Learning Blog

SEPTEMBER 10, 2024

As part of its goal to help people live longer, healthier lives, Genomics England is interested in facilitating more accurate identification of cancer subtypes and severity using machine learning (ML). Cemre holds a PhD in theoretical machine learning and has postdoctoral experience in machine learning for computer vision and healthcare.

Data Science Dojo

AUGUST 16, 2023

2018: Transformer models achieve state-of-the-art results on a wide range of NLP tasks, including machine translation, text summarization, and question answering. 2019: Transformers are used to create large language models (LLMs) such as BERT and GPT-2. 2020: LLMs are used to create even more powerful models such as GPT-3.
