Generative vs Discriminative AI: Understanding the 5 Key Differences

Data Science Dojo

MAY 27, 2024

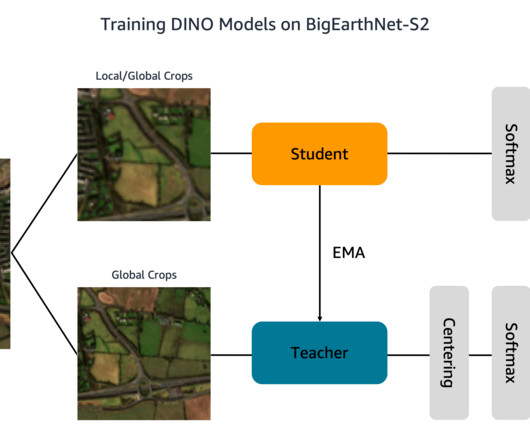

A visual representation of discriminative AI – Source: Analytics Vidhya

Discriminative modeling, often linked with supervised learning, categorizes existing data: it learns the boundary between classes by estimating the probability of a label given an input, P(y | x). Generative AI often operates in unsupervised or semi-supervised learning settings, where it learns the underlying distribution of the existing data and uses it to generate new data points that follow the same patterns.
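The contrast can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the article's own example. It uses a hypothetical toy 1-D dataset. Logistic regression stands in for a discriminative model and estimates P(y | x). A per-class Gaussian stands in for a generative model: it models P(x | y), so it can sample brand-new points. The model choices and every name (`fit_logistic`, `fit_gaussian`, the data) are illustrative.

```python
import math
import random

# Hypothetical toy 1-D dataset: two classes drawn from different Gaussians.
random.seed(0)
class0 = [random.gauss(-2.0, 1.0) for _ in range(200)]
class1 = [random.gauss(2.0, 1.0) for _ in range(200)]
xs = class0 + class1
ys = [0] * len(class0) + [1] * len(class1)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Discriminative (illustrative choice): logistic regression models P(y=1 | x)
# directly, learning only the boundary between the two classes.
def fit_logistic(xs, ys, lr=0.1, epochs=200):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Full-batch gradient of the mean log-loss.
        gw = gb = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y
            gw += err * x
            gb += err
        w -= lr * gw / n
        b -= lr * gb / n
    return w, b


# Generative (illustrative choice): fit a Gaussian to one class, i.e. model
# P(x | y). This is enough to sample new data points from that class.
def fit_gaussian(values):
    mu = sum(values) / len(values)
    var = sum((v - mu) ** 2 for v in values) / len(values)
    return mu, math.sqrt(var)


w, b = fit_logistic(xs, ys)
p_class1 = sigmoid(w * 1.5 + b)  # categorizes an input; cannot create data

mu1, sigma1 = fit_gaussian(class1)
new_points = [random.gauss(mu1, sigma1) for _ in range(5)]  # synthesizes data

print(f"P(y=1 | x=1.5) = {p_class1:.3f}")
print("New samples resembling class 1:", [round(p, 2) for p in new_points])
```

The discriminative model can only answer "which class does this input belong to?" The generative model has captured what class 1 data looks like, so it can produce new examples of it. Real generative AI systems learn far richer distributions, but the division of labor is the same.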
