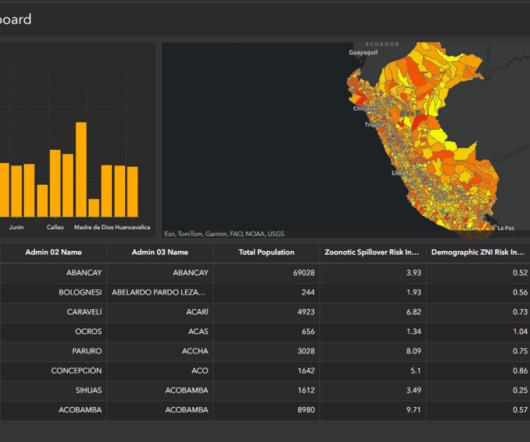

Revolutionizing earth observation with geospatial foundation models on AWS

MAY 29, 2025

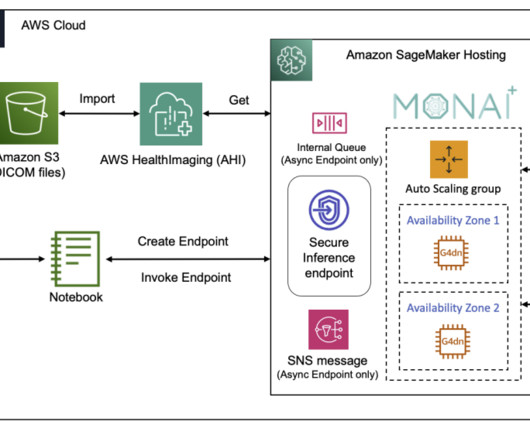

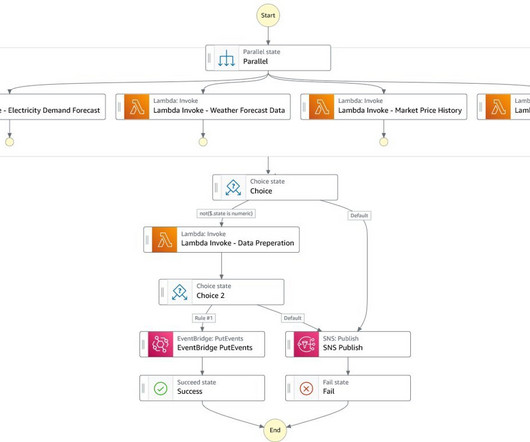

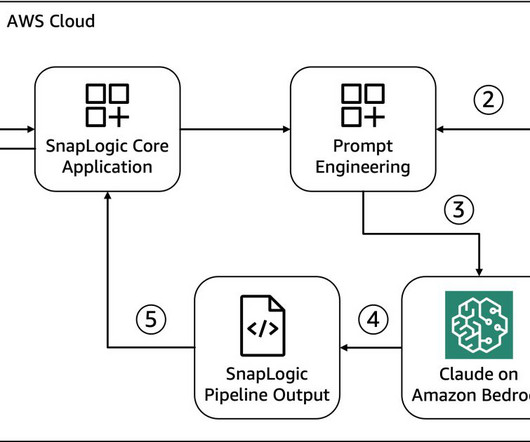

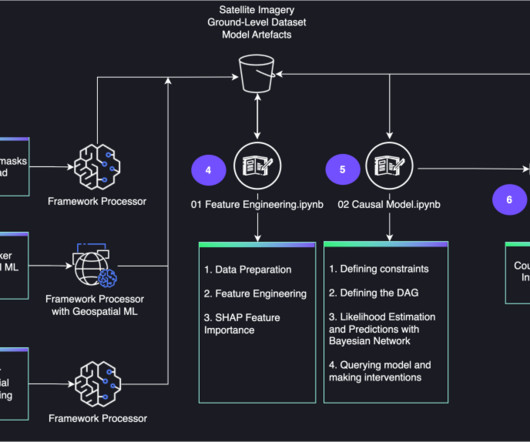

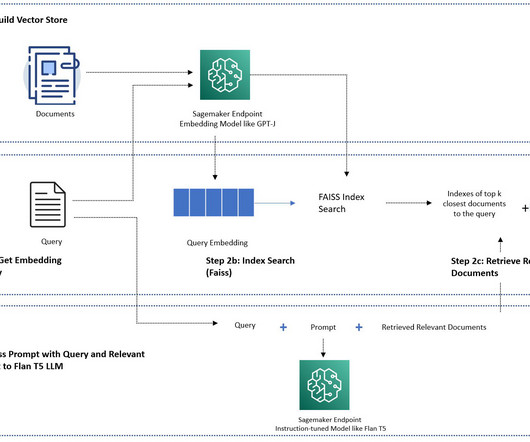

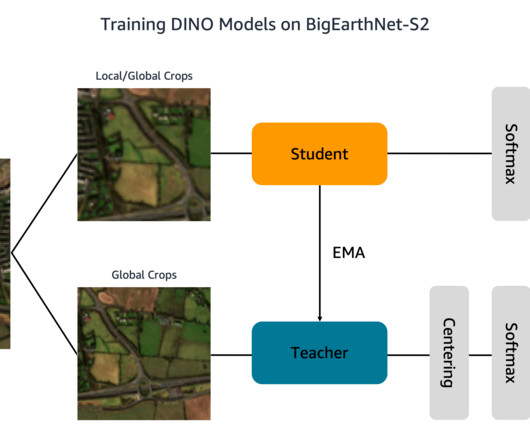

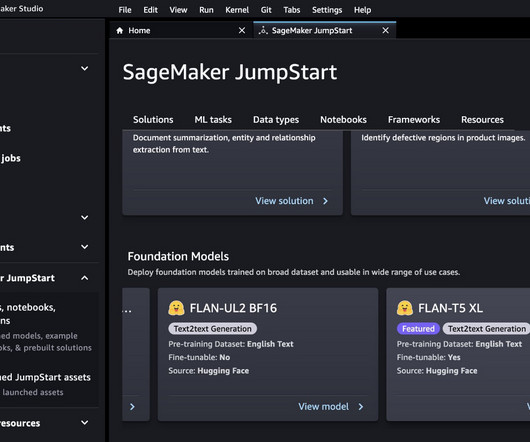

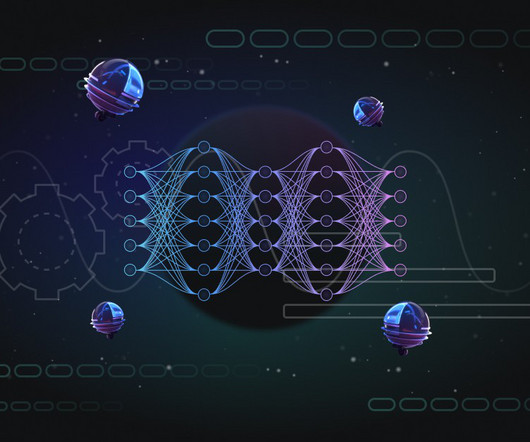

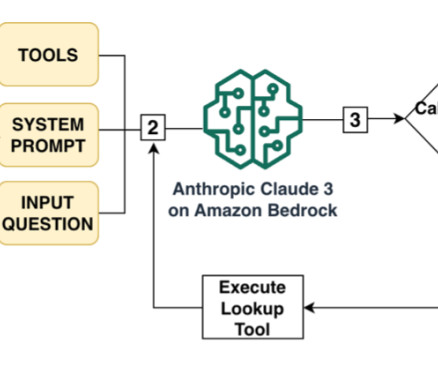

It also comes with ready-to-deploy code samples to help you get started quickly with deploying GeoFMs in your own applications on AWS. For a full architecture diagram showing how the flow can be implemented on AWS, see the accompanying GitHub repository. Let's dive in!

Solution overview

At the core of our solution is a GeoFM.