Build an AI-powered document processing platform with open source NER model and LLM on Amazon SageMaker

APRIL 23, 2025

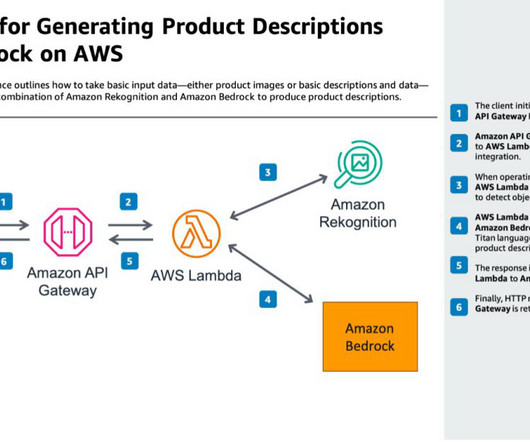

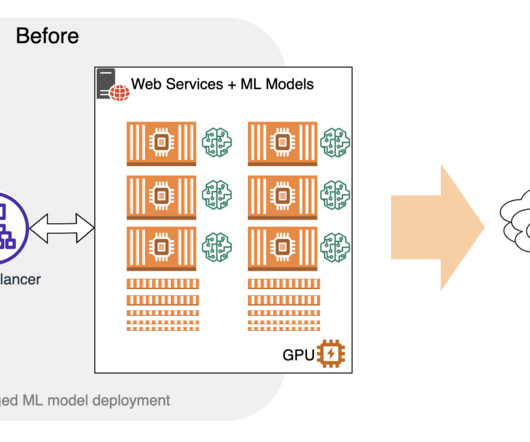

Built on a serverless, cost-optimized architecture, the platform provisions SageMaker endpoints dynamically, so compute resources are used efficiently without sacrificing scalability. Because the endpoints are decoupled, individual models can also be updated or replaced without affecting the rest of the system.
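Here is a minimal Python sketch of dynamic provisioning with decoupled endpoints. It assumes each model (for example the NER model and the LLM) gets its own endpoint. That endpoint is created on demand and deleted when a job finishes.

Some names are hypothetical and do not come from the platform described here: `EndpointManager`, the `<model>-endpoint` naming scheme, and the endpoint config names. The `list_endpoints`, `create_endpoint`, `update_endpoint`, and `delete_endpoint` calls mirror methods of the boto3 SageMaker client, so in practice you would pass `boto3.client("sagemaker")`. An in-memory fake stands in so the sketch runs without AWS credentials.

```python
from typing import Dict, Protocol


class SageMakerClient(Protocol):
    """Subset of boto3 SageMaker client methods this sketch relies on."""

    def list_endpoints(self, **kwargs) -> dict: ...
    def create_endpoint(self, **kwargs) -> dict: ...
    def update_endpoint(self, **kwargs) -> dict: ...
    def delete_endpoint(self, **kwargs) -> dict: ...


class EndpointManager:
    """Hypothetical helper: one independently managed endpoint per model."""

    def __init__(self, client: SageMakerClient, configs: Dict[str, str]):
        self.client = client
        # Maps a logical model name (e.g. "ner", "llm") to its endpoint config name.
        self.configs = dict(configs)

    @staticmethod
    def _endpoint_name(model: str) -> str:
        return f"{model}-endpoint"  # hypothetical naming scheme

    def _exists(self, name: str) -> bool:
        # NameContains is a substring filter, so check for an exact match.
        # Pagination is ignored for brevity.
        resp = self.client.list_endpoints(NameContains=name)
        return any(e["EndpointName"] == name for e in resp.get("Endpoints", []))

    def ensure_endpoint(self, model: str) -> str:
        """Create the model's endpoint only if it does not already exist."""
        name = self._endpoint_name(model)
        if not self._exists(name):
            self.client.create_endpoint(
                EndpointName=name, EndpointConfigName=self.configs[model]
            )
        return name

    def release_endpoint(self, model: str) -> None:
        """Delete the endpoint after use so no idle instance keeps running."""
        name = self._endpoint_name(model)
        if self._exists(name):
            self.client.delete_endpoint(EndpointName=name)

    def swap_model(self, model: str, new_config: str) -> None:
        """Point one model at a new endpoint config; other models are untouched."""
        self.configs[model] = new_config
        name = self._endpoint_name(model)
        if self._exists(name):
            self.client.update_endpoint(EndpointName=name, EndpointConfigName=new_config)


class FakeSageMakerClient:
    """In-memory stand-in so the sketch runs without AWS."""

    def __init__(self) -> None:
        self.endpoints: Dict[str, str] = {}  # endpoint name -> endpoint config name

    def list_endpoints(self, NameContains: str = "", **_) -> dict:
        return {"Endpoints": [{"EndpointName": n} for n in self.endpoints if NameContains in n]}

    def create_endpoint(self, EndpointName: str, EndpointConfigName: str, **_) -> dict:
        self.endpoints[EndpointName] = EndpointConfigName
        return {}

    def update_endpoint(self, EndpointName: str, EndpointConfigName: str, **_) -> dict:
        self.endpoints[EndpointName] = EndpointConfigName
        return {}

    def delete_endpoint(self, EndpointName: str, **_) -> dict:
        del self.endpoints[EndpointName]
        return {}


if __name__ == "__main__":
    client = FakeSageMakerClient()
    mgr = EndpointManager(client, {"ner": "ner-config-v1", "llm": "llm-config-v1"})
    mgr.ensure_endpoint("ner")
    mgr.ensure_endpoint("llm")
    mgr.swap_model("llm", "llm-config-v2")  # replace only the LLM
    print(client.endpoints)
    # {'ner-endpoint': 'ner-config-v1', 'llm-endpoint': 'llm-config-v2'}
    mgr.release_endpoint("ner")  # tear down once the NER step is done
```

With a real SageMaker client, endpoint creation is asynchronous. A caller would typically block on the client's `endpoint_in_service` waiter before sending inference requests. Keeping each model behind its own endpoint config is what lets `swap_model` change one model without redeploying the others.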
