Feature scaling: A way to elevate data potential

Data Science Dojo

FEBRUARY 14, 2024

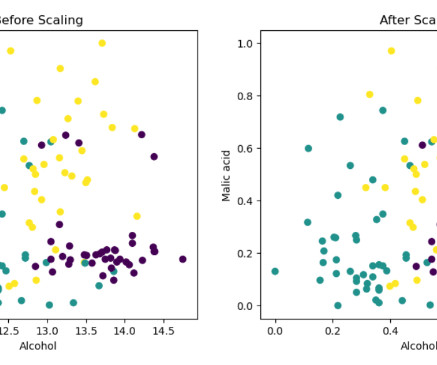

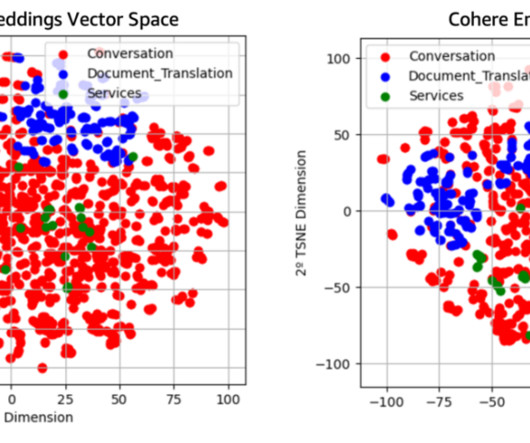

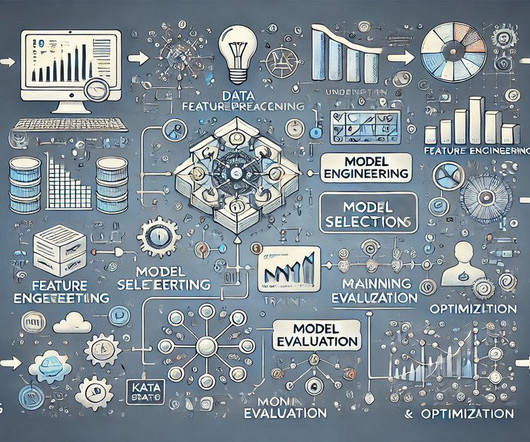

In the world of data science and machine learning, feature transformation plays a crucial role in achieving accurate and reliable results. By manipulating the input features of a dataset, we can enhance their quality, extract meaningful information, and improve the performance of predictive models.

Let's personalize your content