Data Abstraction for Data Engineering with its Different Levels

Analytics Vidhya

OCTOBER 10, 2022

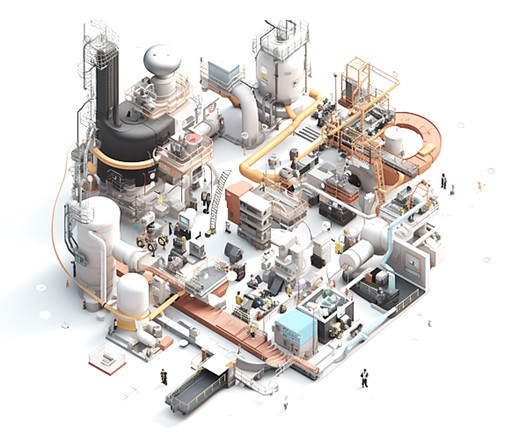

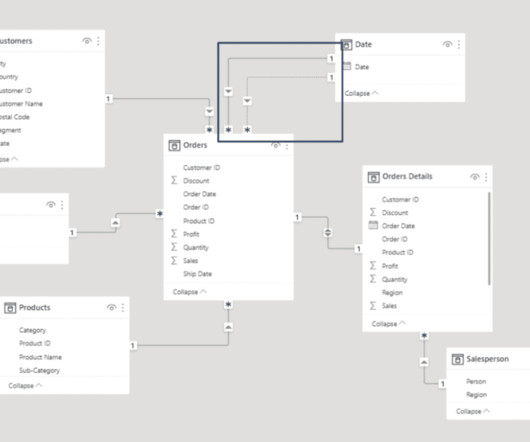

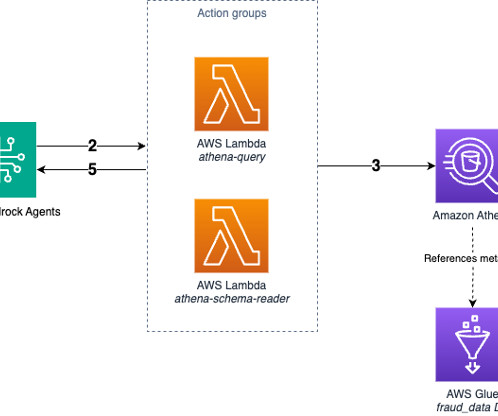

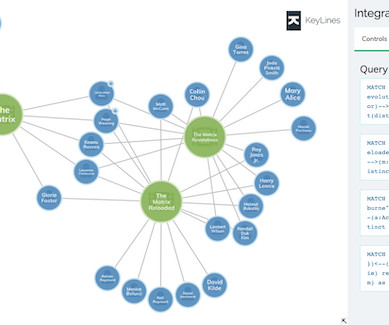

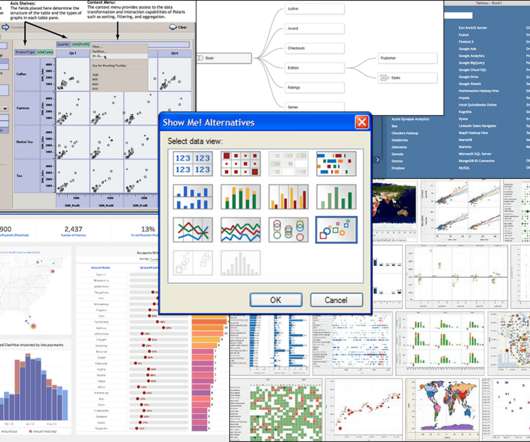

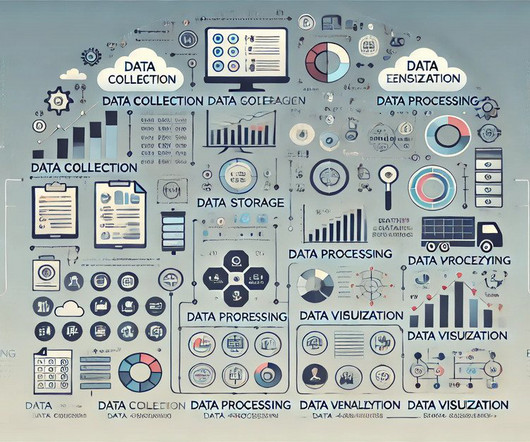

This article was published as a part of the Data Science Blogathon. Introduction A data model is an abstraction of real-world events that we use to create, capture, and store data in a database that user applications require, omitting unnecessary details.

Let's personalize your content