Essential data engineering tools for 2023: Empowering for management and analysis

Data Science Dojo

JULY 6, 2023

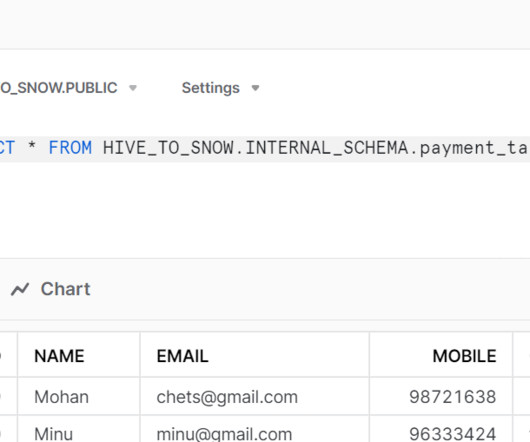

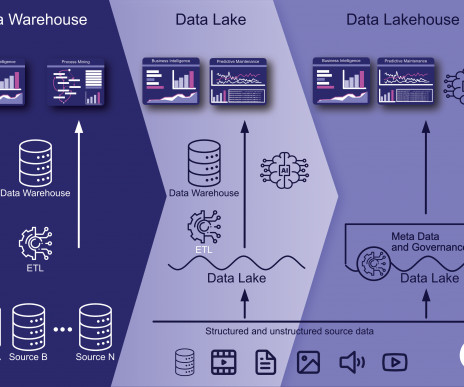

Data engineering tools are software applications or frameworks designed to manage, process, and transform large volumes of data.

Top 10 data engineering tools to watch out for in 2023
