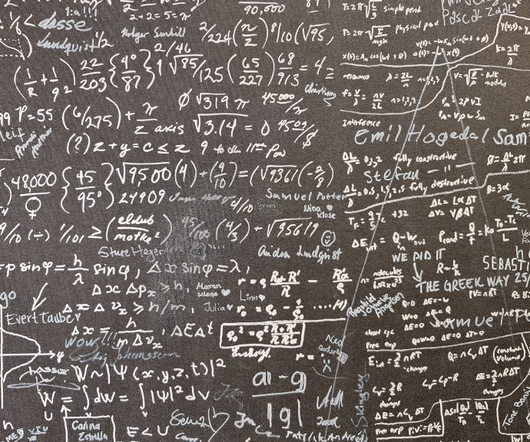


Mlearning.ai

MARCH 1, 2023

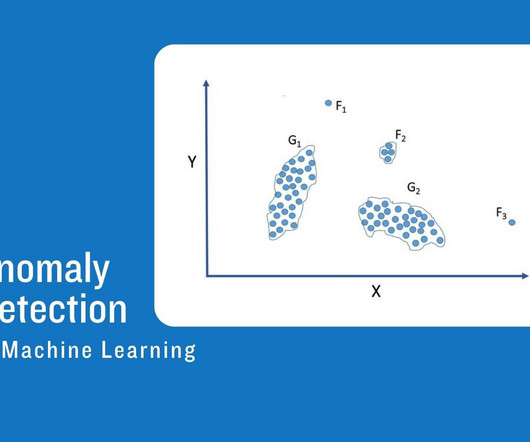

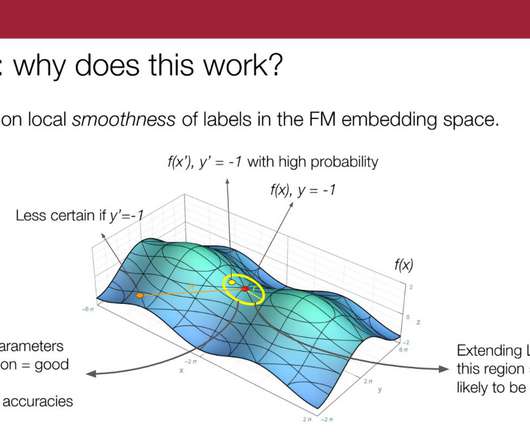

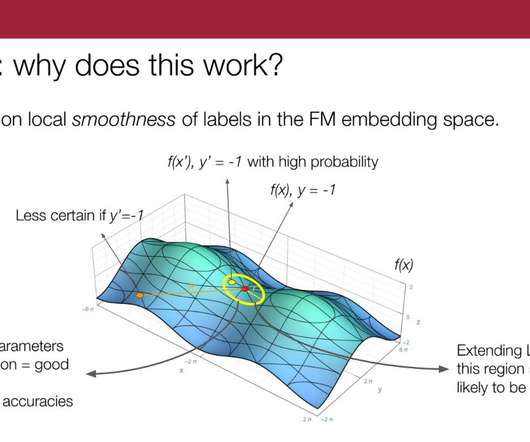

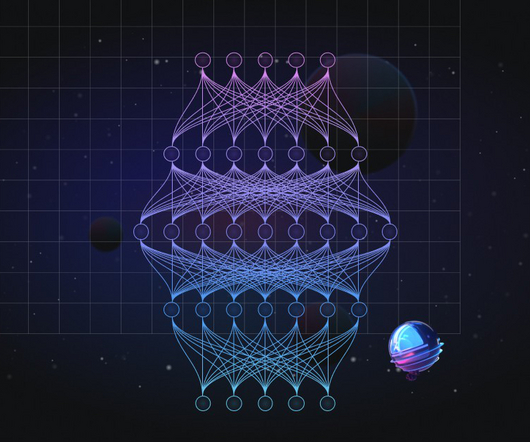

The K-Nearest Neighbors Algorithm Math Foundations: Hyperplanes, Voronoi Diagrams and Spatial Metrics

Diagram 1: Phenoms and 57s are both clustered around their respective centroids.

Clustering methods are a hot topic in data analysis.

2.3 K-Nearest Neighbors

Suppose that a new aircraft is being made.
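The excerpt stops before the article's own worked example. The following is a minimal sketch of k-nearest-neighbors classification with a Euclidean metric. It assumes each aircraft is described by a numeric feature vector and labeled "Phenom" or "57". The feature values, the helper names `euclidean` and `knn_classify`, and the choice k = 3 are all hypothetical, chosen only for illustration.

```python
import math
from collections import Counter


def euclidean(a, b):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(train, query, k=3):
    """Return the majority label among the k training points closest to `query`.

    `train` is a list of (features, label) pairs. Counter.most_common orders
    equal counts by first appearance, so a tied vote goes to the label whose
    nearest member is closest to the query.
    """
    # Sort the training points by distance to the query and keep the k nearest.
    nearest = sorted(train, key=lambda item: euclidean(item[0], query))[:k]
    # Count the labels among those neighbours and return the most common one.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical training data: two made-up numeric features per aircraft.
train = [
    ((1.0, 1.2), "Phenom"),
    ((1.1, 0.9), "Phenom"),
    ((0.9, 1.0), "Phenom"),
    ((4.0, 4.2), "57"),
    ((4.3, 3.9), "57"),
    ((3.8, 4.1), "57"),
]

# Hypothetical feature vector for the new aircraft.
new_aircraft = (1.2, 1.1)
print(knn_classify(train, new_aircraft, k=3))  # Phenom
```

The new aircraft lies inside the Phenom cluster, so all three of its nearest neighbors are Phenoms. With k = 1, each training point claims exactly the region of space closer to it than to any other point, i.e. its Voronoi cell. This is one standard way k-NN connects to Voronoi diagrams.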
