Orchestrate Ray-based machine learning workflows using Amazon SageMaker

AWS Machine Learning Blog

SEPTEMBER 18, 2023

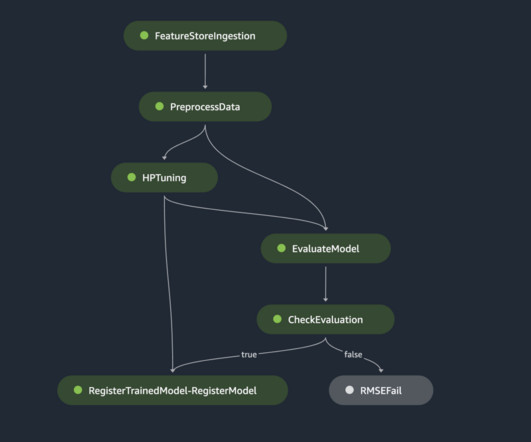

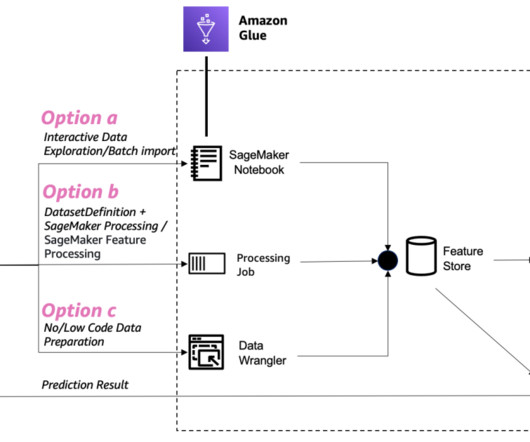

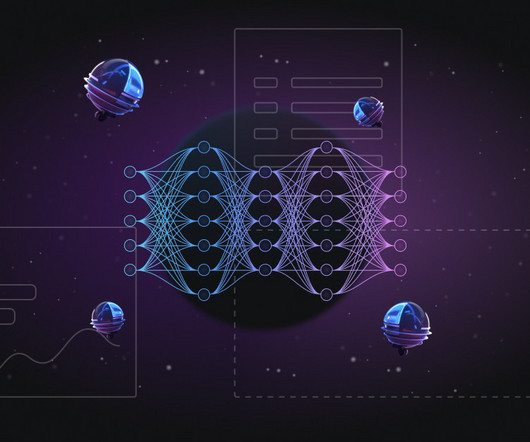

With Ray and the Ray AI Runtime (AIR), the same Python code can scale from a laptop to a large cluster. Amazon SageMaker Pipelines lets you orchestrate the end-to-end ML lifecycle, from data preparation and training to model deployment, as automated workflows. Ray actors can also declare their own resource requirements, such as the number of CPUs or GPUs each one needs.
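The point about actor resource requirements can be sketched as follows. This is a minimal illustration rather than code from the original post: the `Counter` class, the `ACTOR_RESOURCES` dictionary, and the `run_demo` helper are hypothetical names, and the sketch assumes Ray is installed locally (for example with `pip install ray`).

```python
class Counter:
    """Plain Python class; it becomes a Ray actor only when wrapped below."""

    def __init__(self):
        self.value = 0

    def increment(self):
        self.value += 1
        return self.value


# Hypothetical resource request: reserve one CPU for each actor instance.
ACTOR_RESOURCES = {"num_cpus": 1}


def run_demo(calls=3):
    """Start a local Ray runtime, call the actor, and return the results."""
    import ray  # imported here so the definitions above load without Ray

    ray.init(ignore_reinit_error=True)
    try:
        # ray.remote(**options) returns a decorator that turns the class
        # into an actor class carrying the requested resources.
        RemoteCounter = ray.remote(**ACTOR_RESOURCES)(Counter)
        counter = RemoteCounter.remote()
        # Each method call returns an object reference; ray.get resolves them.
        refs = [counter.increment.remote() for _ in range(calls)]
        return ray.get(refs)
    finally:
        ray.shutdown()
```

Calling `run_demo()` on a laptop starts a single-node Ray runtime. On a cluster, the same actor definition is placed on a node that has the requested CPU available. Adding a `num_gpus` entry to the dictionary would request GPUs in the same way.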
