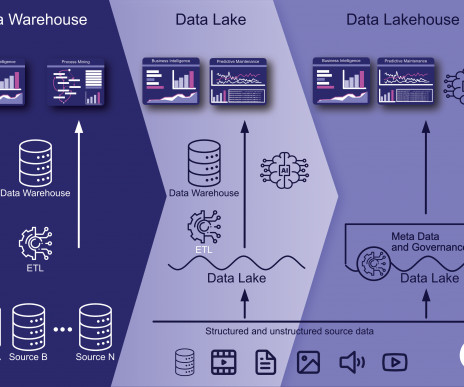

How a Delta Lake is Process with Azure Synapse Analytics

Analytics Vidhya

JULY 29, 2022

This article was published as a part of the Data Science Blogathon. The post How a Delta Lake is Process with Azure Synapse Analytics appeared first on Analytics Vidhya.

Let's personalize your content