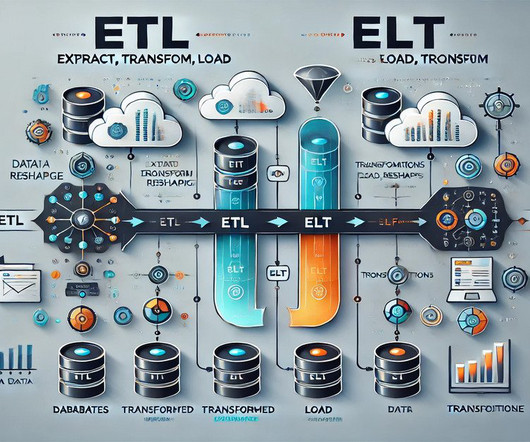

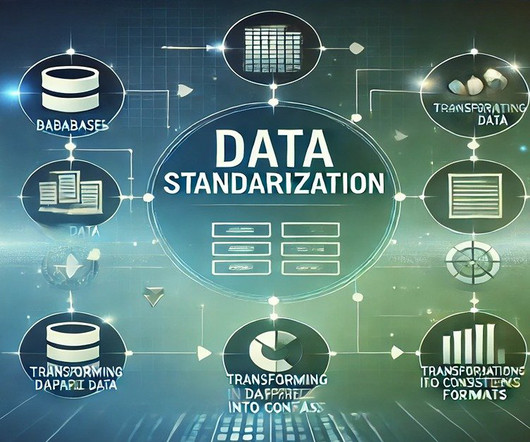

Data preprocessing

Dataconomy

APRIL 28, 2025

Importance of data preprocessing

The role of data preprocessing cannot be overstated, as it significantly influences the quality of the data analysis process. High-quality data is paramount for extracting knowledge and gaining insights.
