Difference Between ETL and ELT Pipelines

Analytics Vidhya

MARCH 16, 2023

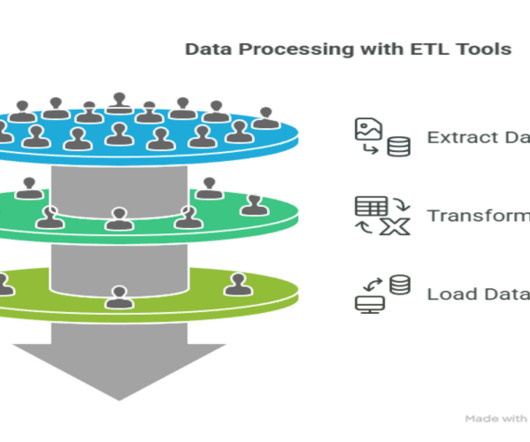

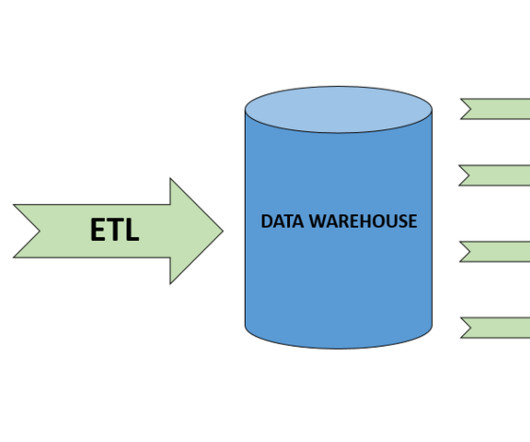

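Introduction

ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are data integration techniques, and both are used to transfer data from one system to another. They differ mainly in where the transformation step runs. ETL transforms the data before loading it into the target system. ELT loads the raw data first and transforms it inside the target, which is typically a data warehouse.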
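To make the difference in ordering concrete, here is a minimal sketch using Python's built-in `sqlite3` module. An in-memory SQLite database stands in for the target warehouse. The sample rows, the table names (`sales_etl`, `sales_raw`, `sales_elt`) and the `extract`/`etl`/`elt` helpers are hypothetical and exist only for illustration. Both pipelines apply the same cleanup: they title-case the customer name and parse the amount as a number. The ETL version does this in Python before loading. The ELT version loads the raw strings and then runs the cleanup as SQL inside the database.

```python
import sqlite3

# Hypothetical source records standing in for data extracted from a source system.
SOURCE_ROWS = [
    ("alice", "  42.50 "),
    ("bob", "17.00"),
    ("carol", " 8.25"),
]


def extract():
    """Extract: read raw rows from the (hypothetical) source system."""
    return list(SOURCE_ROWS)


def etl(conn):
    """ETL: transform in the pipeline, then load only the cleaned rows."""
    raw = extract()
    # Transform BEFORE loading: capitalise names and parse amounts in Python.
    cleaned = [(name.title(), float(amount.strip())) for name, amount in raw]
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", cleaned)


def elt(conn):
    """ELT: load raw rows as-is, then transform inside the target with SQL."""
    raw = extract()
    # Load first: the untouched source strings land in a raw table.
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", raw)
    # Transform AFTER loading, using the target database's own SQL engine.
    conn.execute(
        """
        CREATE TABLE sales_elt AS
        SELECT upper(substr(customer, 1, 1)) || substr(customer, 2) AS customer,
               CAST(trim(amount) AS REAL) AS amount
        FROM sales_raw
        """
    )


if __name__ == "__main__":
    etl_conn = sqlite3.connect(":memory:")
    etl(etl_conn)
    elt_conn = sqlite3.connect(":memory:")
    elt(elt_conn)
    print(etl_conn.execute("SELECT * FROM sales_etl ORDER BY customer").fetchall())
    print(elt_conn.execute("SELECT * FROM sales_elt ORDER BY customer").fetchall())
```

Both runs end with the same cleaned rows. With ELT, however, the raw `sales_raw` table also stays in the target, so it can be re-transformed later without extracting the data again. With ETL, only the already-transformed data ever reaches the target.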
