How to Assess Data Quality Readiness for Modern Data Pipelines

Dataversity

FEBRUARY 13, 2023

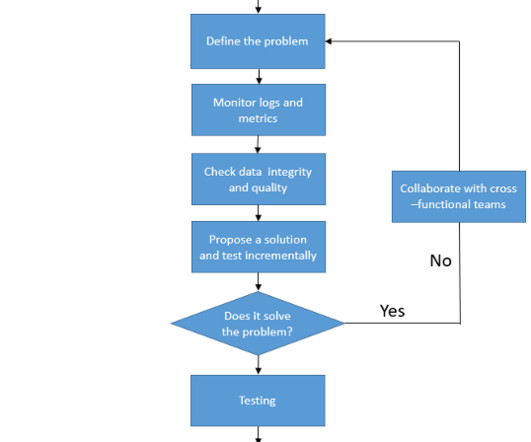

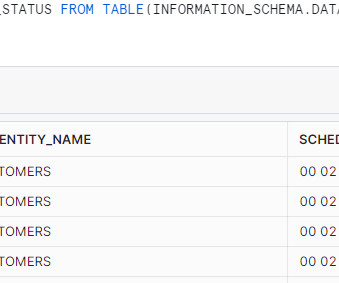

The key to being truly data-driven is having access to accurate, complete, and reliable data. In fact, Gartner recently found that organizations believe […] The post How to Assess Data Quality Readiness for Modern Data Pipelines appeared first on DATAVERSITY.
