Accelerate NLP inference with ONNX Runtime on AWS Graviton processors

AWS Machine Learning Blog

MAY 15, 2024

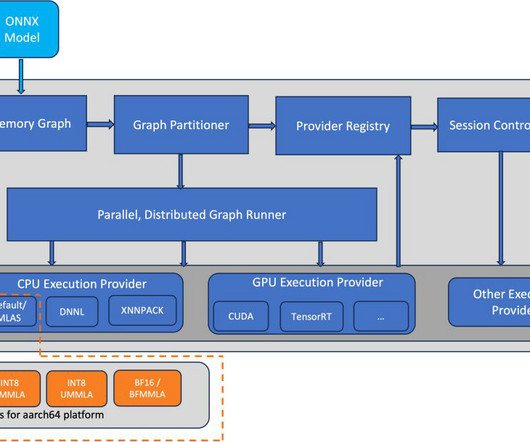

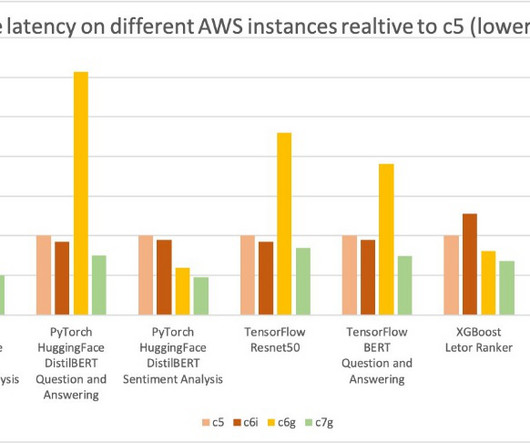

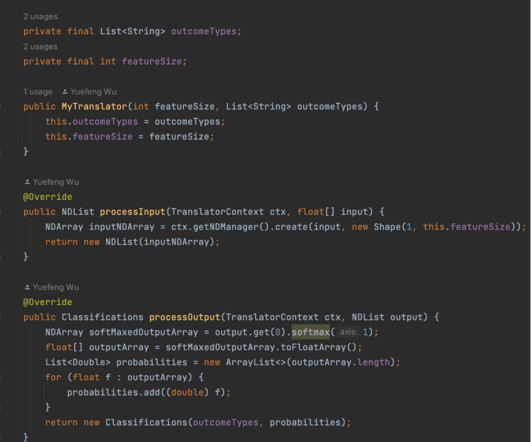

ONNX is an open source machine learning (ML) framework that provides interoperability across a wide range of frameworks, operating systems, and hardware platforms. AWS Graviton3 processors are optimized for ML workloads, including support for bfloat16, Scalable Vector Extension (SVE), and Matrix Multiplication (MMLA) instructions.

Let's personalize your content