Building a Data Pipeline with PySpark and AWS

Analytics Vidhya

AUGUST 3, 2021

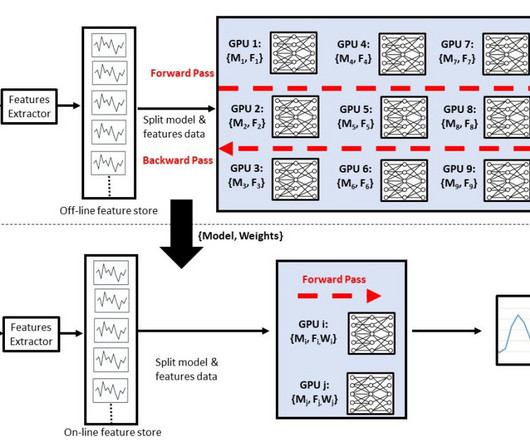

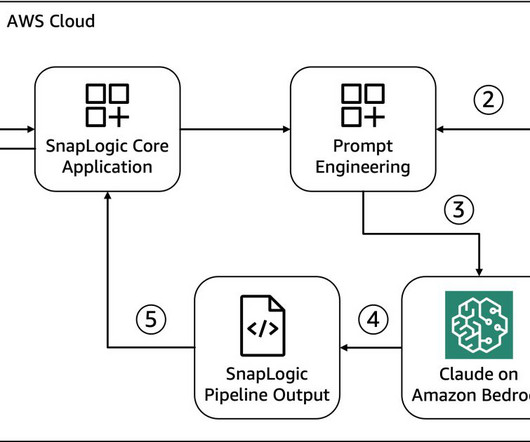

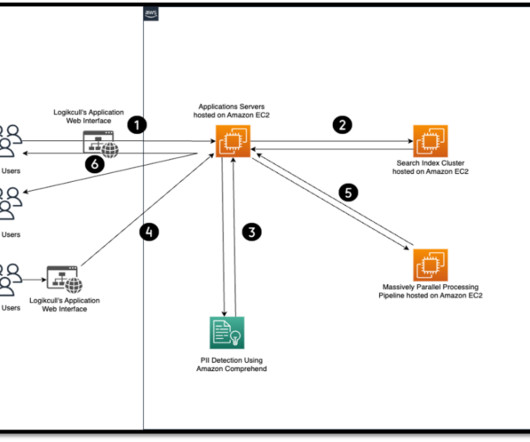

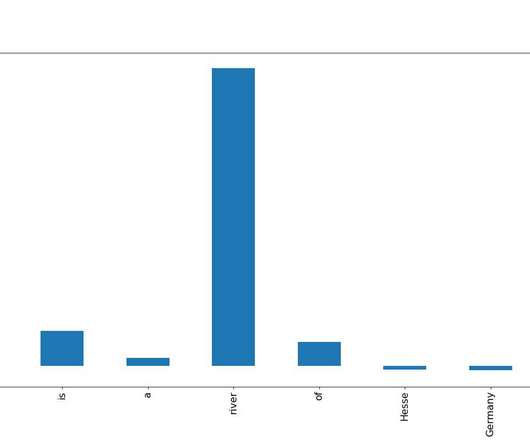

This article was published as part of the Data Science Blogathon.

Introduction

Apache Spark is a framework used in cluster computing environments.

The post Building a Data Pipeline with PySpark and AWS appeared first on Analytics Vidhya.