Introduction to Partitioned Hive Table and PySpark

Analytics Vidhya

OCTOBER 28, 2021

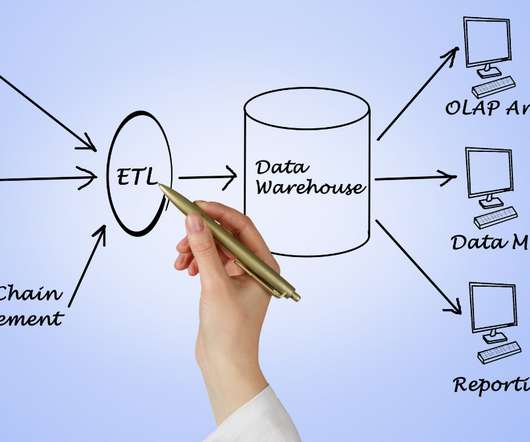

The official description of Hive is: ‘Apache Hive is a data warehouse software project built on top of Apache Hadoop for providing data query and analysis. Hive gives an SQL-like interface to query data stored in various databases and […]’.
