How IDIADA optimized its intelligent chatbot with Amazon Bedrock

AWS Machine Learning Blog

FEBRUARY 25, 2025

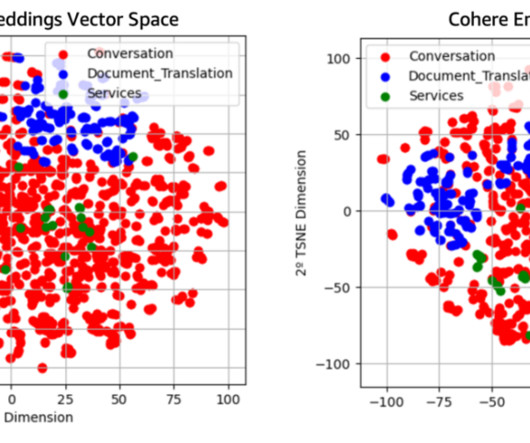

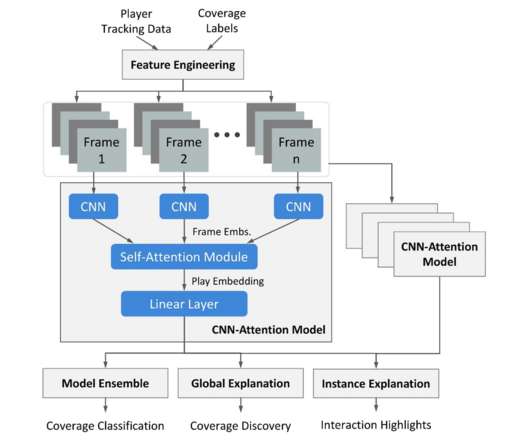

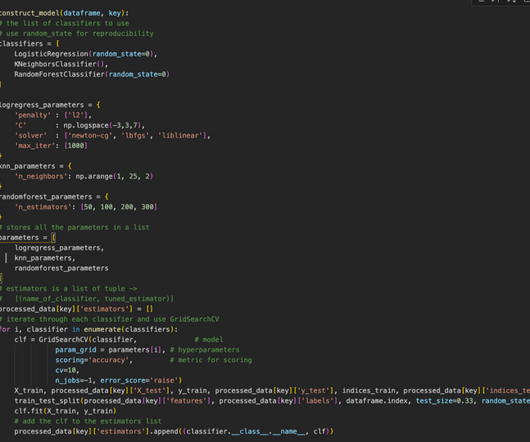

In 2021, Applus+ IDIADA , a global partner to the automotive industry with over 30 years of experience supporting customers in product development activities through design, engineering, testing, and homologation services, established the Digital Solutions department. Model architecture The model consists of three densely connected layers.

Let's personalize your content