Transforming financial analysis with CreditAI on Amazon Bedrock: Octus’s journey with AWS

AWS Machine Learning Blog

MARCH 10, 2025

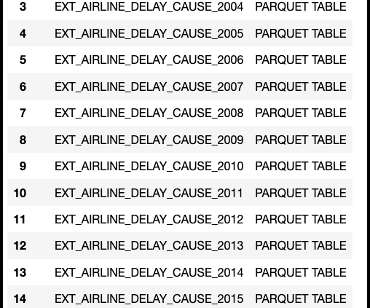

Founded in 2013, Octus, formerly Reorg, is the essential credit intelligence and data provider for the world's leading buy-side firms, investment banks, law firms, and advisory firms. The integration of text-to-SQL will enable users to query structured databases using natural language, simplifying data access.
