Scale ML workflows with Amazon SageMaker Studio and Amazon SageMaker HyperPod

AWS Machine Learning Blog

DECEMBER 4, 2024

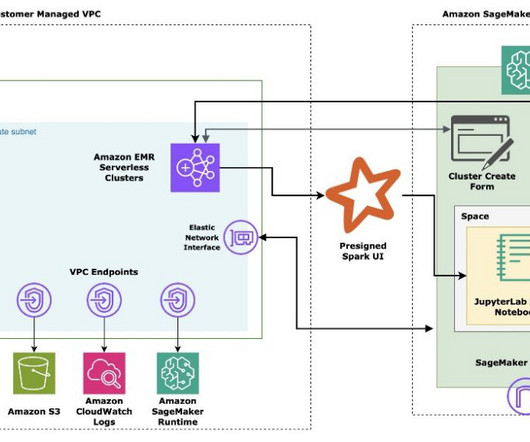

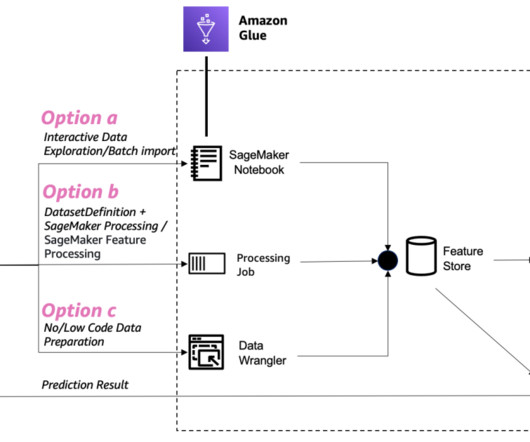

The integration of SageMaker Studio with SageMaker HyperPod addresses these hurdles. It gives data scientists and ML engineers a comprehensive environment that supports the entire ML lifecycle, from development to deployment at scale. Because you stay in the same environment throughout, you don't need to migrate data or change code as your workloads grow.
