Listening with LLM

JANUARY 13, 2024

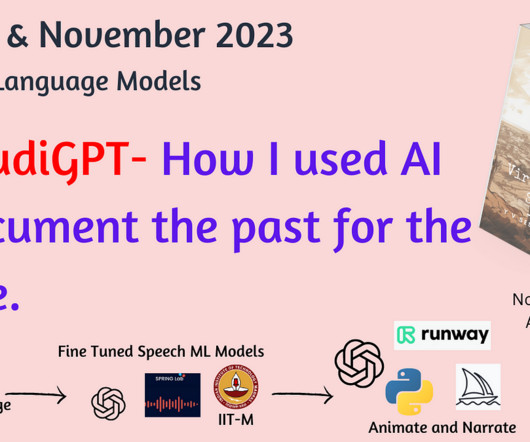

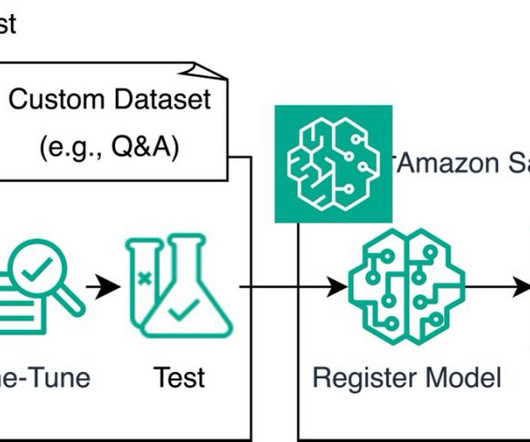

Overview

This is the first of many posts I am writing to consolidate what I have learned about finetuning Large Language Models (LLMs) to process audio, with the eventual goal of building and hosting an LLM that can describe human voices.
