Accelerating predictive task time to value with generative AI

Snorkel AI

AUGUST 17, 2023

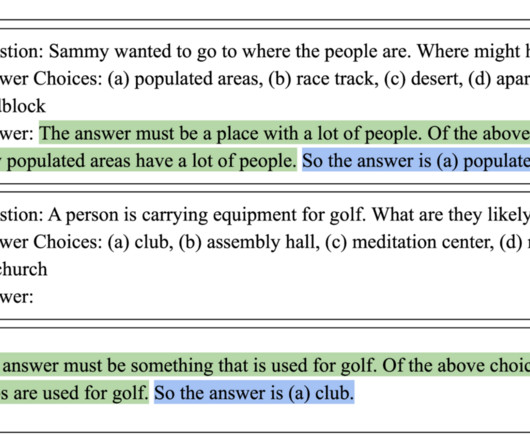

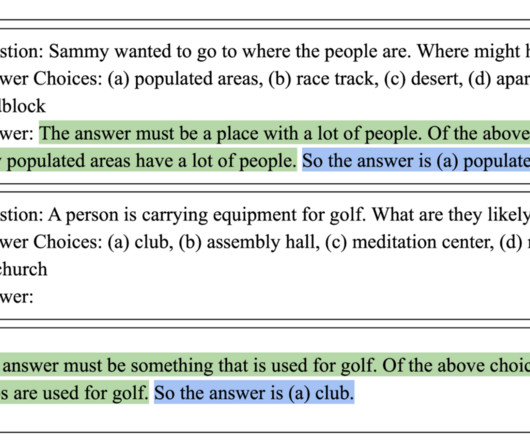

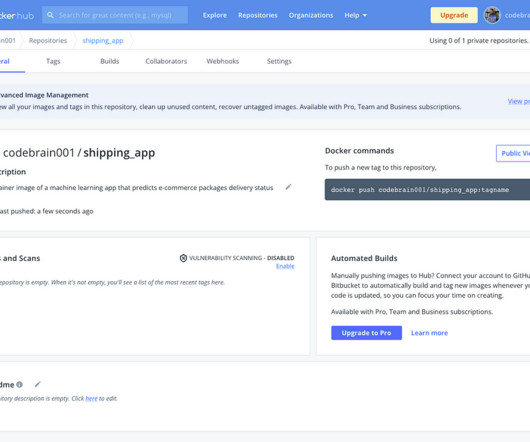

They can write poems, recite common knowledge, and extract information from submitted text. The latter will map the model’s outputs to final labels and significantly ease the data preparation process. To help bootstrap better, cheaper models. Generative artificial intelligence models offer a wealth of capabilities.

Let's personalize your content