The 40-hour LLM application roadmap: Learn to build your own LLM applications from scratch

Data Science Dojo

AUGUST 9, 2023

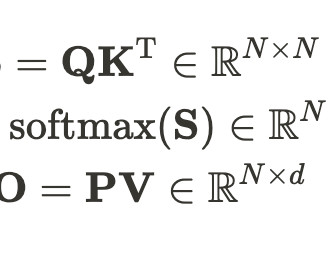

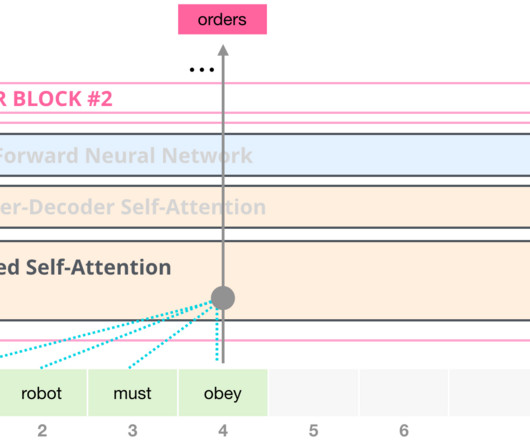

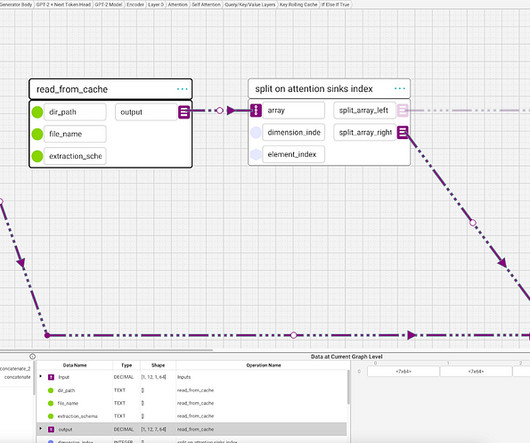

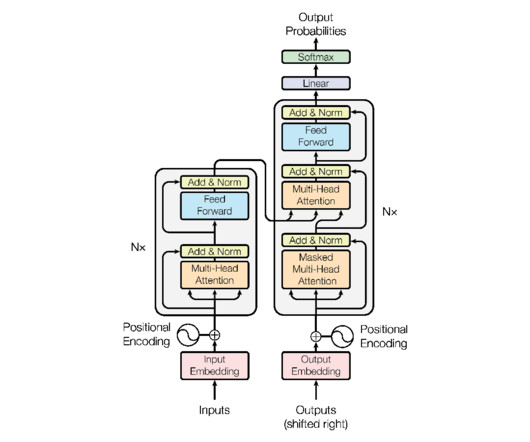

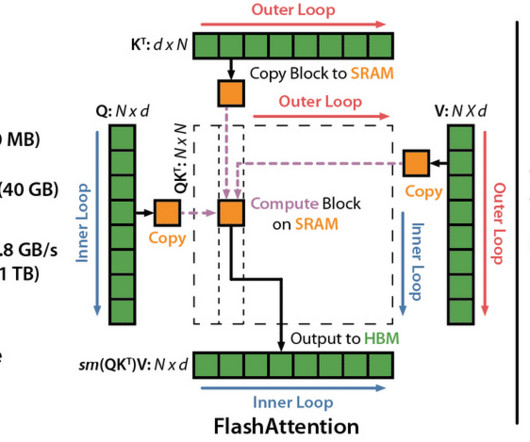

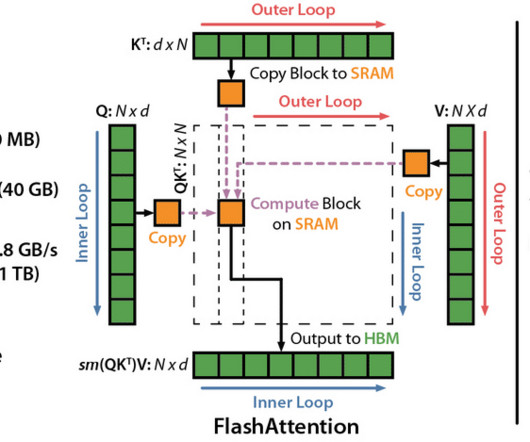

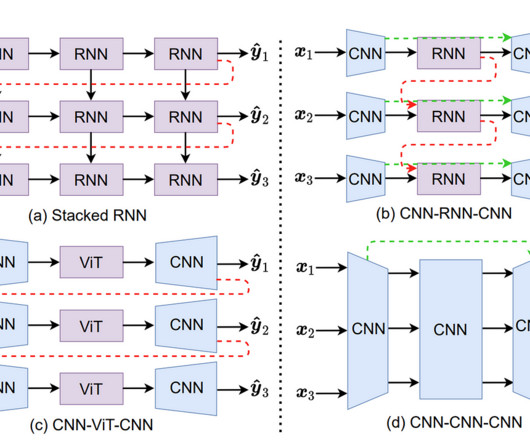

Attention mechanism and transformers: The attention mechanism is a technique that lets an LLM weigh the most relevant parts of its input, such as particular words in a sentence, when generating each piece of text. Transformers are a type of neural network built around the attention mechanism, and they achieve state-of-the-art results in natural language processing tasks.
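To make the idea of "focusing" concrete, here is a minimal sketch of scaled dot-product attention, the form of attention used in transformers, written in plain Python. It rests on several simplifying assumptions: the token embeddings are made-up 2-dimensional vectors, the queries, keys, and values are the embeddings themselves (real transformers first pass them through learned projection matrices and use several attention heads), and the function names `softmax` and `attention` are illustrative rather than taken from any library.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
            for k in keys
        ]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional embeddings for a three-token sentence.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: the same vectors act as queries, keys, and values.
outputs, weights = attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(w, 2) for w in row])
```

Each printed row shows how strongly one token attends to every token in the sentence. The weights in a row always sum to 1, so a larger weight on one token means less attention is left for the others.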
