Understanding the XLNet Pre-trained Model

Analytics Vidhya

MAY 16, 2024

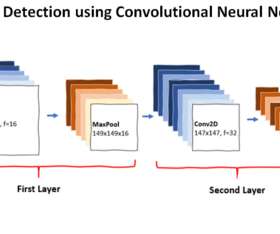

Introduction XLNet is an autoregressive pretraining method proposed in the paper “XLNet: Generalized Autoregressive Pretraining for Language Understanding ” XLNet uses an innovative approach to training. This means […] The post Understanding the XLNet Pre-trained Model appeared first on Analytics Vidhya.

Let's personalize your content