Training Logistic Regression with Cross-Entropy Loss in PyTorch

Machine Learning Mastery

DECEMBER 30, 2022

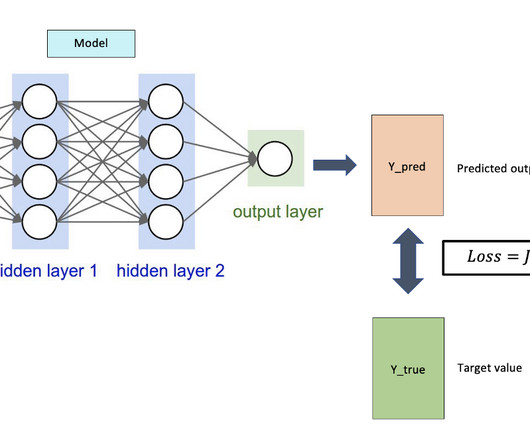

Last Updated on December 30, 2022

In the previous tutorial of our PyTorch series, we showed how badly initialized weights can hurt the accuracy of a classification model trained with mean squared error (MSE) loss. The model did not converge during training, and its accuracy dropped significantly.
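One common explanation for this stall is gradient saturation. The sketch below is a minimal, plain-Python illustration (no PyTorch required) that compares the gradient of each loss with respect to the logit `z` of a single sigmoid output. The helper names `mse_grad` and `bce_grad` and the values `z = -10`, `y = 1` are hypothetical choices made for illustration, not code from the previous tutorial.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function p = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def mse_grad(z: float, y: float) -> float:
    """Gradient of (p - y)^2 with respect to z (hypothetical helper).

    Chain rule: dL/dp = 2(p - y) and dp/dz = p(1 - p), so the
    factor p(1 - p) shrinks toward 0 whenever the sigmoid saturates.
    """
    p = sigmoid(z)
    return 2.0 * (p - y) * p * (1.0 - p)


def bce_grad(z: float, y: float) -> float:
    """Gradient of -(y log p + (1 - y) log(1 - p)) with respect to z (hypothetical helper).

    The p(1 - p) factor cancels, leaving p - y, which stays large
    when the prediction is confidently wrong.
    """
    p = sigmoid(z)
    return p - y


# Assumed scenario: a badly initialized weight pushes the logit far
# negative while the true label is 1 (a confidently wrong prediction).
z, y = -10.0, 1.0
print(f"MSE gradient w.r.t. z: {mse_grad(z, y):.3e}")
print(f"BCE gradient w.r.t. z: {bce_grad(z, y):.3e}")
```

For this confidently wrong prediction, the MSE gradient is several orders of magnitude smaller than the cross-entropy gradient. Gradient descent therefore barely moves the weights under MSE, which is consistent with the non-convergence described above.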
