Improve LLM performance with human and AI feedback on Amazon SageMaker for Amazon Engineering

AWS Machine Learning Blog

APRIL 24, 2024

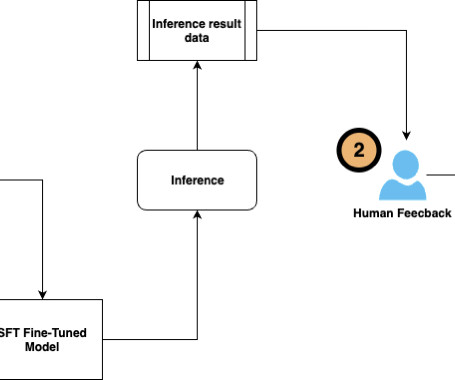

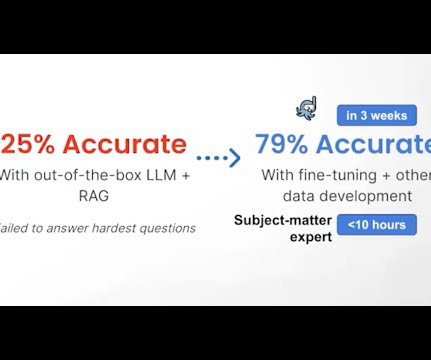

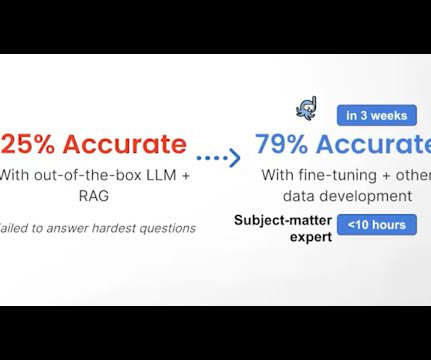

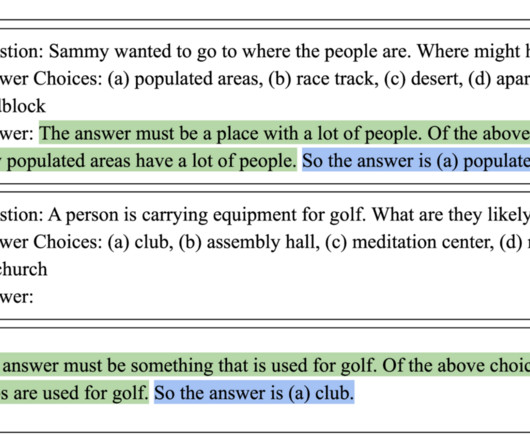

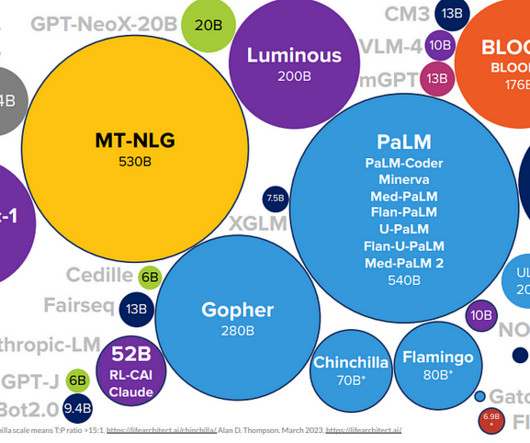

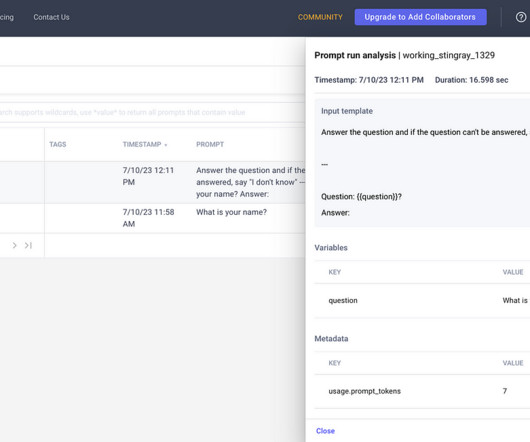

Human reviewers provide feedback scores for the LLM responses. They can also provide a better answer to the question or comment on why an LLM response is not satisfactory. To increase the number of training samples for better learning, we also used another LLM to generate feedback scores. We present the reinforcement learning process and the benchmarking results that demonstrate the improvement in LLM performance.
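The post does not show how human and LLM-generated feedback scores are combined into training data. The following is a minimal sketch under several assumptions: each feedback record is a (question, response, score) triple, a human score takes precedence over an LLM score for the same response, and the merged scores are turned into pairwise (chosen, rejected) preference examples, a common input format for training a reward model. The `Feedback` class, the `merge_feedback` and `preference_pairs` functions, the precedence rule, and the pairwise format are hypothetical illustrations, not the authors' pipeline.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Feedback:
    """Hypothetical feedback record for one LLM response."""
    question: str
    response: str
    score: float  # assumed scale, e.g. 1 (poor) to 5 (excellent)
    source: str   # "human" or "llm"


def merge_feedback(human: list[Feedback], llm: list[Feedback]) -> list[Feedback]:
    """Keep every human score; add LLM scores only for responses humans did not rate."""
    merged = {(f.question, f.response): f for f in human}
    for f in llm:
        # setdefault leaves an existing human score untouched (assumed precedence rule)
        merged.setdefault((f.question, f.response), f)
    return list(merged.values())


def preference_pairs(feedback: list[Feedback]) -> list[tuple[str, str, str]]:
    """Emit (question, chosen, rejected) for each pair of differently scored responses."""
    by_question: dict[str, list[Feedback]] = {}
    for f in feedback:
        by_question.setdefault(f.question, []).append(f)

    pairs = []
    for question, items in by_question.items():
        for a, b in combinations(items, 2):
            if a.score == b.score:
                continue  # ties carry no preference signal, so skip them
            chosen, rejected = (a, b) if a.score > b.score else (b, a)
            pairs.append((question, chosen.response, rejected.response))
    return pairs


human = [Feedback("q1", "r1", 5, "human"), Feedback("q1", "r2", 2, "human")]
llm = [Feedback("q1", "r2", 4, "llm"), Feedback("q1", "r3", 3, "llm")]
print(preference_pairs(merge_feedback(human, llm)))
```

In this example, human feedback alone yields one preference pair (r1 over r2). Adding the LLM-scored response r3 while keeping the human score for r2 yields three pairs, which illustrates how LLM-generated scores can enlarge the training set without overriding human judgments.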
